Testing Kubernetes Operators using EnvTest

Yachika Ralhan, Rahul Sawra

A Kubernetes operator is a set of custom controllers that we deploy in a cluster together with CustomResourceDefinitions (CRDs). The controllers watch for changes to the custom resources they own and act on them, for example by creating, modifying, and deleting Kubernetes resources.

To read more about custom controllers and CustomResourceDefinitions, see the Kubernetes documentation.

Why would you build your own Kubernetes operator-based system in the first place? Because you are creating a platform: software that other software is built on. What we build on top of a platform matters, because that is where the real business value lies. This means platforms should be our best-tested software. So if we build platforms on Kubernetes operators, how do we test them? We will see in a bit.

To learn more about use cases for Kubernetes operators, refer to this post about the Prometheus Operator.

Understanding Testing in Kubernetes

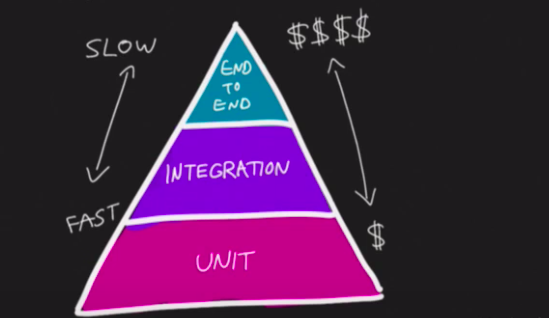

There are three basic forms of testing when it comes to software:

- End to End: As the diagram above shows, this box is small, because end-to-end tests are not meant to provide coverage; they only need to verify that all the different components integrate correctly.
  - This is an expensive operation, because the tests connect to a real Kubernetes cluster with our custom controllers running in it.
- Unit Testing: testing the individual units of an application.
  - This is the cheapest form of testing, but because unit tests cover only individual units, achieving good coverage of a distributed system with them can be challenging.
- Integration Testing
  - We don't need an actual Kubernetes cluster for integration tests (thanks to EnvTest), which saves a significant amount of cost and compute resources.
  - Integration tests check how the application behaves when integrated with other parts of the solution stack, for example, when multiple controllers are registered with the manager.

Because of the limitations of end-to-end and unit tests described above, integration tests have become more important. How we write our integration tests depends on how we built our controllers. We will use EnvTest with Ginkgo and Gomega to test our CRD-based operator.

EnvTest is a Go library, part of controller-runtime, that helps you write integration tests for your controllers by setting up and starting an instance of etcd and the Kubernetes API server, without the kubelet, controller-manager, or other components.

Ginkgo is a testing framework for Go designed to help you write expressive tests. It is best paired with the Gomega matcher library. When combined, Ginkgo and Gomega provide a rich and expressive DSL (Domain-specific Language) for writing tests.

Gomega is a matcher/assertion library. It is best paired with the Ginkgo BDD test framework.

Testing Kubernetes Operators using EnvTest

We will use a sample operator built with the Operator SDK framework and write integration test cases for it. Testing Kubernetes controllers is a big subject, and the boilerplate test files that Kubebuilder/Operator SDK generate for you are fairly minimal. Creating the operator from scratch is out of the scope of this post; to build one, refer to the guide to building operators using Go.

The files in our setup are organized as follows:

```text
└── controllers/
    ├── memcached_controller.go
    ├── suite_test.go
    └── memcached_controller_test.go
```

Sample Memcached CR:

```yaml
apiVersion: cache.infracloud.io/v1alpha1
kind: Memcached
metadata:
  name: memcached-sample
spec:
  size: 2
```
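The CR above corresponds to Go API types in the operator (imported as cachev1alpha1 in the code below). The dependency-free sketch here shows roughly how the manifest's fields map onto Go structs. The field names and JSON tags are assumptions inferred from the sample CR and from the fields the reconciler reads (Spec.Size, Status.Nodes); the real type also embeds metav1.TypeMeta and metav1.ObjectMeta, which this hypothetical stand-in replaces with plain fields to stay within the standard library.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ObjectMeta is a hypothetical stand-in keeping only the metadata field used in the sample CR.
type ObjectMeta struct {
	Name string `json:"name"`
}

// MemcachedSpec mirrors the spec block of the sample CR. Size is int32 because
// the reconciler assigns &size to Deployment.Spec.Replicas, which is *int32.
type MemcachedSpec struct {
	Size int32 `json:"size"`
}

// MemcachedStatus holds the pod names that the reconciler writes through the status writer.
type MemcachedStatus struct {
	Nodes []string `json:"nodes,omitempty"`
}

// Memcached is a trimmed-down, hypothetical stand-in for the real CR type.
type Memcached struct {
	APIVersion string          `json:"apiVersion"`
	Kind       string          `json:"kind"`
	Metadata   ObjectMeta      `json:"metadata"`
	Spec       MemcachedSpec   `json:"spec"`
	Status     MemcachedStatus `json:"status"`
}

// decodeMemcached parses the JSON form of a Memcached manifest.
func decodeMemcached(data []byte) (Memcached, error) {
	var m Memcached
	err := json.Unmarshal(data, &m)
	return m, err
}

func main() {
	// JSON equivalent of the sample YAML (the Go standard library has no YAML parser).
	sample := []byte(`{"apiVersion":"cache.infracloud.io/v1alpha1","kind":"Memcached",` +
		`"metadata":{"name":"memcached-sample"},"spec":{"size":2}}`)
	m, err := decodeMemcached(sample)
	if err != nil {
		panic(err)
	}
	fmt.Println(m.Kind, m.Metadata.Name, m.Spec.Size) // prints: Memcached memcached-sample 2
}
```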

Here is the reconciler we will be testing. It creates a Memcached Deployment if one doesn't exist, ensures that the Deployment's replica count matches the size specified in the Memcached custom resource (CR) spec, and then uses the status writer to update the Memcached CR status with the names of the pods.

```go
package controllers

import (
	"context"
	"reflect"
	"time"

	appsv1 "k8s.io/api/apps/v1"
	corev1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/api/errors"
	"k8s.io/apimachinery/pkg/types"
	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/client"
	ctrllog "sigs.k8s.io/controller-runtime/pkg/log"

	// Hypothetical module path: replace with your operator's API package.
	cachev1alpha1 "example.com/memcached-operator/api/v1alpha1"
)

// Reconcile drives a Memcached CR towards its desired state. The MemcachedReconciler
// type and the helpers deploymentForMemcached, labelsForMemcached, and getPodNames
// are defined elsewhere in the controller and are not shown here.
func (r *MemcachedReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
	log := ctrllog.FromContext(ctx)

	// Fetch the Memcached instance
	memcached := &cachev1alpha1.Memcached{}
	err := r.Get(ctx, req.NamespacedName, memcached)
	if err != nil {
		if errors.IsNotFound(err) {
			// Request object not found, could have been deleted after reconcile request.
			// Owned objects are automatically garbage collected. For additional cleanup logic use finalizers.
			// Return and don't requeue
			log.Info("Memcached resource not found. Ignoring since object must be deleted")
			return ctrl.Result{}, nil
		}
		// Error reading the object - requeue the request.
		log.Error(err, "Failed to get Memcached")
		return ctrl.Result{}, err
	}

	// Check if the deployment already exists, if not create a new one
	found := &appsv1.Deployment{}
	err = r.Get(ctx, types.NamespacedName{Name: memcached.Name, Namespace: memcached.Namespace}, found)
	if err != nil && errors.IsNotFound(err) {
		// Define a new deployment
		dep := r.deploymentForMemcached(memcached)
		log.Info("Creating a new Deployment", "Deployment.Namespace", dep.Namespace, "Deployment.Name", dep.Name)
		err = r.Create(ctx, dep)
		if err != nil {
			log.Error(err, "Failed to create new Deployment", "Deployment.Namespace", dep.Namespace, "Deployment.Name", dep.Name)
			return ctrl.Result{}, err
		}
		// Deployment created successfully - return and requeue
		return ctrl.Result{Requeue: true}, nil
	} else if err != nil {
		log.Error(err, "Failed to get Deployment")
		return ctrl.Result{}, err
	}

	// Ensure the deployment size is the same as the spec
	size := memcached.Spec.Size
	if *found.Spec.Replicas != size {
		found.Spec.Replicas = &size
		err = r.Update(ctx, found)
		if err != nil {
			log.Error(err, "Failed to update Deployment", "Deployment.Namespace", found.Namespace, "Deployment.Name", found.Name)
			return ctrl.Result{}, err
		}
		// Ask to requeue after 1 minute in order to give enough time for the
		// pods be created on the cluster side and the operand be able
		// to do the next update step accurately.
		return ctrl.Result{RequeueAfter: time.Minute}, nil
	}

	// Update the Memcached status with the pod names
	// List the pods for this memcached's deployment
	podList := &corev1.PodList{}
	listOpts := []client.ListOption{
		client.InNamespace(memcached.Namespace),
		client.MatchingLabels(labelsForMemcached(memcached.Name)),
	}
	if err = r.List(ctx, podList, listOpts...); err != nil {
		log.Error(err, "Failed to list pods", "Memcached.Namespace", memcached.Namespace, "Memcached.Name", memcached.Name)
		return ctrl.Result{}, err
	}
	podNames := getPodNames(podList.Items)

	// Update status.Nodes if needed
	if !reflect.DeepEqual(podNames, memcached.Status.Nodes) {
		memcached.Status.Nodes = podNames
		err := r.Status().Update(ctx, memcached)
		if err != nil {
			log.Error(err, "Failed to update Memcached status")
			return ctrl.Result{}, err
		}
	}

	return ctrl.Result{}, nil
}
```

A few simple integration test cases could be:

- Once we create the Memcached CR, the controller should create a Kubernetes Deployment.

- The replica count of the Deployment should match the size in the Memcached CR spec.

- If we update the size in the Memcached CR spec, the controller should update the replica count of the Deployment.

Operator SDK generates the boilerplate setup and teardown of testEnv for you in the Ginkgo test suite it creates under the controllers/ directory.

Configuring test suites using Ginkgo

Ginkgo supports suite-level setup and cleanup through two specialized suite setup nodes:

- BeforeSuite

- AfterSuite

BeforeSuite: Ginkgo runs our BeforeSuite closure at the beginning of the run phase, i.e., after the spec tree has been constructed but before any specs (test cases) have run. It performs the following tasks, which are executed only once:

- Creates a testEnv object that holds the EnvTest environment configuration, for example, the path to the directory that contains all our CRDs.

- Starts testEnv, which starts a local Kubernetes control plane (etcd and the API server).

- Adds our custom API types to the scheme.

- Creates a Kubernetes client that our test suite uses to access the testEnv API server.

- Creates a new manager, registers our custom Memcached controller with it, and starts the manager in a goroutine so that the controller runs while the specs execute.

AfterSuite: The AfterSuite closure runs after all the tests have finished. It cancels the manager's context to stop the controller, then tears down the setup by stopping the testEnv control plane.

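```go
package controllers

import (
	"context"
	"path/filepath"
	"testing"
	"time"

	. "github.com/onsi/ginkgo/v2" // assuming Ginkgo v2; older scaffolds import github.com/onsi/ginkgo
	. "github.com/onsi/gomega"

	"k8s.io/client-go/kubernetes/scheme"
	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/client"
	"sigs.k8s.io/controller-runtime/pkg/envtest"
	logf "sigs.k8s.io/controller-runtime/pkg/log"
	"sigs.k8s.io/controller-runtime/pkg/log/zap"

	// Hypothetical module path: replace with your operator's API package.
	cachev1alpha1 "example.com/memcached-operator/api/v1alpha1"
)

// Shared by BeforeSuite, AfterSuite, and the specs in memcached_controller_test.go.
var (
	k8sClient client.Client
	testEnv   *envtest.Environment
	ctx       context.Context
	cancel    context.CancelFunc
)

// TestControllers is the `go test` entry point; it hands control to Ginkgo.
func TestControllers(t *testing.T) {
	RegisterFailHandler(Fail)
	RunSpecs(t, "Controller Suite")
}

var _ = BeforeSuite(func() {
	logf.SetLogger(zap.New(zap.WriteTo(GinkgoWriter), zap.UseDevMode(true)))
	ctx, cancel = context.WithCancel(context.TODO())

	By("bootstrapping test environment")
	testEnv = &envtest.Environment{
		// Directory containing the generated CRD manifests.
		CRDDirectoryPaths:     []string{filepath.Join("..", "config", "crd", "bases")},
		ErrorIfCRDPathMissing: true,
		CRDInstallOptions: envtest.CRDInstallOptions{
			MaxTime: 60 * time.Second,
		},
	}

	// Start etcd and the API server; cfg is a rest.Config pointing at them.
	cfg, err := testEnv.Start()
	Expect(err).NotTo(HaveOccurred())
	Expect(cfg).NotTo(BeNil())

	// Register the Memcached types so clients can encode and decode them.
	err = cachev1alpha1.AddToScheme(scheme.Scheme)
	Expect(err).NotTo(HaveOccurred())
	//+kubebuilder:scaffold:scheme

	// Client the specs use to talk to the testEnv API server directly.
	k8sClient, err = client.New(cfg, client.Options{Scheme: scheme.Scheme})
	Expect(err).NotTo(HaveOccurred())
	Expect(k8sClient).NotTo(BeNil())

	// Manager that runs the controller under test.
	k8sManager, err := ctrl.NewManager(cfg, ctrl.Options{
		Scheme: scheme.Scheme,
	})
	Expect(err).ToNot(HaveOccurred())

	err = (&MemcachedReconciler{
		Client: k8sManager.GetClient(),
		Scheme: k8sManager.GetScheme(),
	}).SetupWithManager(k8sManager)
	Expect(err).ToNot(HaveOccurred())

	// Run the manager in the background until AfterSuite cancels ctx.
	go func() {
		defer GinkgoRecover()
		// A goroutine-local err avoids racing on the err variable above.
		err := k8sManager.Start(ctx)
		Expect(err).ToNot(HaveOccurred(), "failed to run manager")
	}()
})

var _ = AfterSuite(func() {
	cancel()
	By("tearing down the test environment")
	err := testEnv.Stop()
	Expect(err).NotTo(HaveOccurred())
})
```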

The code above is part of the suite_test.go file.
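The ctx/cancel pair is what ties BeforeSuite and AfterSuite together: the manager's Start method blocks until its context is cancelled, so the suite runs it in a goroutine and AfterSuite calls cancel() before stopping testEnv. The dependency-free sketch below models that lifecycle. fakeManager and runSuite are hypothetical; the sketch also waits for Start to return, which the suite above does not do explicitly.

```go
package main

import (
	"context"
	"fmt"
	"time"
)

// fakeManager imitates a controller manager: Start blocks until ctx is cancelled.
type fakeManager struct{}

func (fakeManager) Start(ctx context.Context) error {
	<-ctx.Done()
	return nil // a clean shutdown after cancellation returns no error
}

// runSuite models BeforeSuite (start the manager in a goroutine) and
// AfterSuite (cancel the context, then wait for the manager to exit).
func runSuite(specs func()) error {
	ctx, cancel := context.WithCancel(context.Background())
	done := make(chan error, 1)
	go func() { done <- fakeManager{}.Start(ctx) }() // BeforeSuite

	specs() // the specs run while the manager is running

	cancel() // AfterSuite: stop the manager first...
	select {
	case err := <-done:
		return err // ...after which it is safe to stop the control plane
	case <-time.After(5 * time.Second):
		return fmt.Errorf("manager did not stop after cancel")
	}
}

func main() {
	err := runSuite(func() { fmt.Println("running specs") })
	fmt.Println("suite finished, err =", err)
}
```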

Now let’s start writing the integration tests

Ginkgo makes it easy to write expressive specs that describe the behavior of your code in an organized manner. Ginkgo suites are hierarchical collections of specs composed of container nodes, setup nodes, and subject nodes organized into a spec tree.

- Describe is a container node, typically used at the top level, that organizes the specs (here, integration tests) for the different aspects of the code under test into a hierarchy. A Describe node's closure can contain any number of setup nodes (e.g., BeforeEach, AfterEach) and subject nodes (i.e., It).

- Context is an alias for Describe; it generates exactly the same kind of container node.

- We use setup nodes such as BeforeEach to set up the state of our specs. In this case, we initialize the Memcached custom resource object.

- Finally, we use subject nodes such as It to write specs that make assertions about the subject under test. Each It block is one integration test case.

```go
var _ = Describe("MemcachedController", func() {
	Context("testing memcache controller", func() {
		var memcached *cachev1alpha1.Memcached

		BeforeEach(func() {
			memcached = &cachev1alpha1.Memcached{
				ObjectMeta: metav1.ObjectMeta{
					Name:      "test-memcache",
					Namespace: "default",
				},
				Spec: cachev1alpha1.MemcachedSpec{
					Size: 2,
				},
			}
		})

		// Integration tests using It blocks are written here.
	})
})
```

- In the BeforeEach node above, we instantiate a Memcached CR object with size 2. The object is only built in memory here; test case 1 creates it in the API server.

- The BeforeEach node runs before each It block in the same parent Context/Describe.

- The Context block contains all the integration test cases, which we cover in the next section. You can check out the complete code on GitHub.
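To make the BeforeEach/It ordering concrete, here is a tiny, dependency-free model of how the BeforeEach closures of enclosing containers wrap each It. It is not how Ginkgo is implemented: the container type and run function are hypothetical, run a container's own Its before its nested containers, and ignore AfterEach, focus, and randomization.

```go
package main

import "fmt"

// container is a hypothetical model of a Describe/Context node.
type container struct {
	beforeEach []func()
	its        []func()
	children   []*container
}

// run executes every It once, preceded by the BeforeEach closures of all of
// its enclosing containers, outermost first.
func run(c *container, inherited []func()) {
	// Copy so sibling containers never share (and overwrite) the same backing array.
	setups := append(append([]func(){}, inherited...), c.beforeEach...)
	for _, it := range c.its {
		for _, setup := range setups {
			setup()
		}
		it()
	}
	for _, child := range c.children {
		run(child, setups)
	}
}

func main() {
	var log []string
	memcachedCtx := &container{
		beforeEach: []func(){func() { log = append(log, "BeforeEach") }},
		its: []func(){
			func() { log = append(log, "It: should create deployment") },
			func() { log = append(log, "It: verify replicas for deployment") },
		},
	}
	run(&container{children: []*container{memcachedCtx}}, nil)
	fmt.Println(log)
	// prints [BeforeEach It: should create deployment BeforeEach It: verify replicas for deployment]
}
```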

Test Cases

Test case 1

When a Memcached custom resource is created in the cluster, the controller should create a corresponding Deployment.

```go
It("should create deployment", func() {
	Expect(k8sClient.Create(ctx, memcached)).To(Succeed())

	createdDeploy := &appsv1.Deployment{}
	deployKey := types.NamespacedName{Name: memcached.Name, Namespace: memcached.Namespace}

	// The controller creates the Deployment asynchronously, so poll for it.
	Eventually(func() bool {
		err := k8sClient.Get(ctx, deployKey, createdDeploy)
		return err == nil
	}, time.Second*10, time.Millisecond*250).Should(BeTrue())
})
```

- In the It block, we create the Memcached CR and expect the returned error to be nil. Once the CR exists, the running controller should create a Deployment.

- We then make a `Get` call to the testEnv Kubernetes API server inside an Eventually block (similar to a retry block with a timeout) and expect the Deployment to be found within that period; otherwise, the test fails.

Test case 2

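The Deployment created by the controller should have the same number of replicas as the user defined in the custom resource spec. This spec reuses the CR created in test case 1, so it relies on the specs in this Context running in the order they are defined, which is Ginkgo's default within a container.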

```go
It("verify replicas for deployment", func() {
	createdDeploy := &appsv1.Deployment{}
	deployKey := types.NamespacedName{Name: memcached.Name, Namespace: memcached.Namespace}

	Eventually(func() bool {
		err := k8sClient.Get(ctx, deployKey, createdDeploy)
		return err == nil
	}, time.Second*10, time.Millisecond*250).Should(BeTrue())

	Expect(createdDeploy.Spec.Replicas).To(Equal(&memcached.Spec.Size))
})
```

- We define the namespaced key of the Deployment we want to fetch.

- We then fetch the Deployment created by the controller inside an Eventually block.

- Eventually performs asynchronous assertions by polling the provided input. Gomega polls the input repeatedly until the matcher is satisfied; once that happens, the assertion succeeds and execution continues. If the matcher is never satisfied, Eventually times out with a useful error message. Both the timeout and the polling interval are configurable (here, 10 seconds and 250 milliseconds). You can find more information in the Gomega documentation.

- Finally, we use Expect to assert that the Deployment's replica count matches `memcached.Spec.Size`.

Test case 3

Test case 3 uses Gomega's assertion functions in the same way: once a user updates the custom resource (here, the number of replicas), the corresponding Deployment should be updated.

```go
It("should update deployment, once memcached size is changed", func() {
	// Fetch the current CR so that the update carries the latest resourceVersion.
	Expect(k8sClient.Get(ctx, types.NamespacedName{Name: memcached.Name, Namespace: memcached.Namespace},
		memcached)).Should(Succeed())

	// update size to 3
	memcached.Spec.Size = 3
	Expect(k8sClient.Update(ctx, memcached)).Should(Succeed())

	// Confirm the API server has stored the new size (a Get error fails that poll).
	Eventually(func() (int32, error) {
		err := k8sClient.Get(ctx,
			types.NamespacedName{Name: memcached.Name, Namespace: memcached.Namespace},
			memcached)
		return memcached.Spec.Size, err
	}, time.Second*10, time.Millisecond*250).Should(Equal(int32(3)))

	createdDeploy := &appsv1.Deployment{}
	deployKey := types.NamespacedName{Name: memcached.Name, Namespace: memcached.Namespace}

	// The controller scales the Deployment asynchronously, so keep polling until
	// its replica count matches the new size instead of checking it only once.
	Eventually(func() (int32, error) {
		if err := k8sClient.Get(ctx, deployKey, createdDeploy); err != nil {
			return 0, err
		}
		return *createdDeploy.Spec.Replicas, nil
	}, time.Second*10, time.Millisecond*250).Should(Equal(int32(3)))
})
```

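After writing all this code, you can run the test cases with `go test ./...` (or the ginkgo CLI) from your controllers/ directory. EnvTest needs local etcd and kube-apiserver binaries, which it locates through the KUBEBUILDER_ASSETS environment variable; in projects scaffolded by recent Operator SDK versions, the `make test` target typically downloads these binaries and sets the variable for you.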

You can check out the whole code base in our GitHub repository k8s-operator-with-tests.

A few tips from our experience

- EnvTest is a lightweight control plane meant only for testing. It does not run the built-in Kubernetes controllers, such as the Deployment and ReplicaSet controllers. In the example above, you therefore cannot verify whether pods are created for the Deployment.

- Prefer Gomega's Eventually for asynchronous assertions, especially around Get and Update calls to the API server, because the controller reconciles in the background and its changes do not appear immediately.

- Use `ginkgo --until-it-fails` to identify flaky tests.

That's all for this post. This blog post is co-written by Yachika and Rahul. If you are working with Kubernetes operators or plan to use them and need some assistance, feel free to reach out to Yachika Ralhan and Rahul Sawra. We're always excited to hear thoughts and feedback from our readers!

Looking for help with Kubernetes adoption or Day 2 operations? Check out how we're helping startups and enterprises with our Kubernetes consulting services and capabilities.

