Should You Always Use a Service Mesh?

Service meshes have become popular lately, and many organizations are jumping on the bandwagon. With promises of better observability, simpler microservice management, and more reliable communication, the service mesh has become the talk of the town. But before you join the frenzy, it’s crucial to pause and ask whether your specific use case truly demands one.

In this blog post, we will look beyond the service mesh hype. We’ll explore the factors you should consider when determining if it is the right fit for your architecture, weigh the advantages and drawbacks, and determine whether it’s a necessary addition to your technology stack or just a passing trend.

So, let’s dive in and explore the service mesh from a neutral standpoint.

What is a service mesh?

Let’s first cover the basics of what a service mesh is and the advantages and challenges that come with it. Then we’ll look at the use cases where a service mesh shines and where you can get by without one.

A service mesh serves as a dedicated infrastructure layer designed to abstract away the complexities associated with service-to-service communication within a microservices architecture. Typically, it comprises a set of lightweight network proxies, commonly referred to as sidecars, which are deployed alongside each service in the cluster. These sidecars play a pivotal role in managing communication, providing features such as service discovery, load balancing, traffic management, and security.

However, it’s important to acknowledge the evolving landscape of service mesh technologies. Recent advancements, such as Istio’s Ambient Mesh and Cilium, have introduced deployment options that go beyond the traditional sidecar model. These technologies can run as per-node pods in a DaemonSet rather than as a sidecar next to every service, offering an alternative approach to orchestrating service-to-service communication and enhancing security.

In essence, these proxies act as intermediaries, facilitating seamless inter-service communication while creating room for the implementation of essential features to optimize microservices deployments.

To learn more about how a service mesh works, its use cases, benefits, and challenges, and the popular service meshes out there, you can read this detailed blog on service mesh.

Advantages of service mesh

Besides simplifying and enhancing communication management between services in a distributed system, service mesh also adds multiple features to the network, including:

-

Observability & monitoring: Service mesh offers valuable insights into the communication between services and effective monitoring to help in troubleshooting application errors.

-

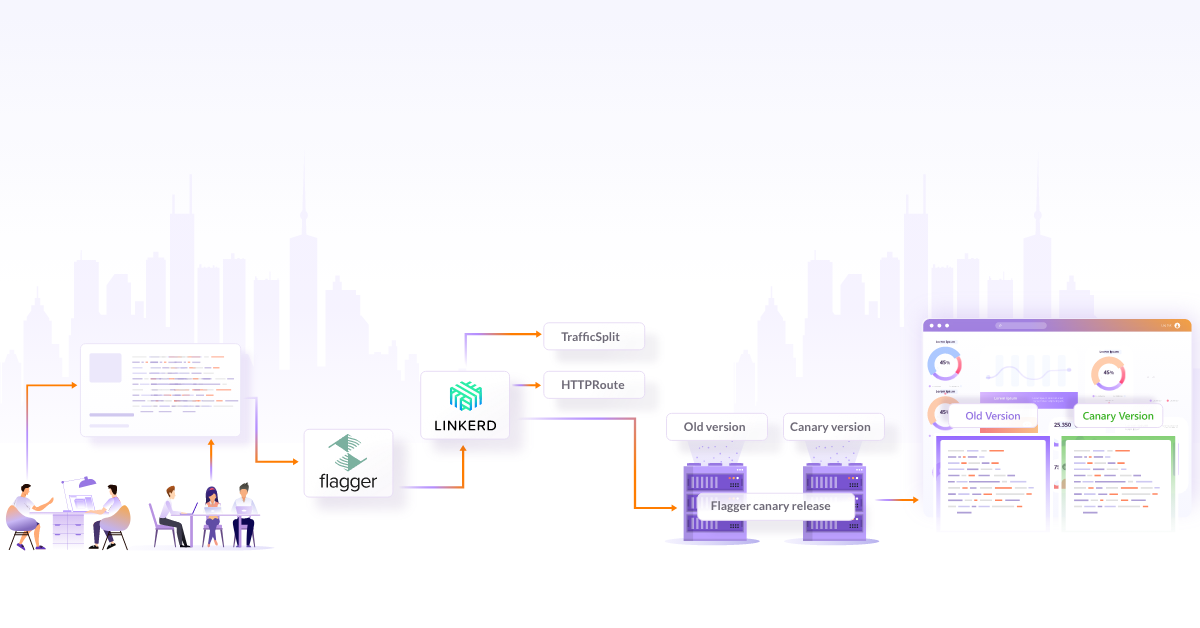

Traffic management: Service mesh offers intelligent request distribution, load balancing, and support for canary deployments. These capabilities enhance resource utilization and enable efficient traffic management.

-

Resilience & reliability: By handling retries, timeouts, and failures, service mesh contributes to the overall stability and resilience of services, reducing the impact of potential disruptions.

-

Security: Service mesh enforces security policies, and handles authentication, authorization, and encryption – ensuring secure communication between services and eventually, strengthening the overall security posture of the application.

-

Service discovery: With service discovery features, service mesh can simplify the process of locating and routing services dynamically, adapting to system changes seamlessly. This enables easier management and interaction between services.

-

Microservices communication: Adopting a service mesh can simplify the implementation of a microservices architecture by abstracting away infrastructure complexities. It provides a standardized approach to manage and orchestrate communication within the microservices ecosystem.
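To make the traffic management point more concrete, here is a minimal sketch of weighted canary routing, the kind of decision a mesh proxy makes on your behalf for each request. The function names and the 90/10 split are assumptions for illustration only; a real mesh is configured declaratively rather than through code like this.

```python
import random
from collections import Counter


def choose_backend(weights, rng):
    """Pick a backend name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights, requests, seed=0):
    """Route `requests` simulated calls and count how many hit each backend."""
    rng = random.Random(seed)  # seeded so the simulation is repeatable
    return Counter(choose_backend(weights, rng) for _ in range(requests))


if __name__ == "__main__":
    # Hypothetical canary rollout: roughly 10% of traffic goes to the new version.
    print(simulate({"v1": 90, "v2": 10}, requests=10_000))
```

Shifting more traffic to the canary is then only a matter of changing the weights, which is what makes gradual rollouts cheap once this logic lives in the proxy rather than in every service.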

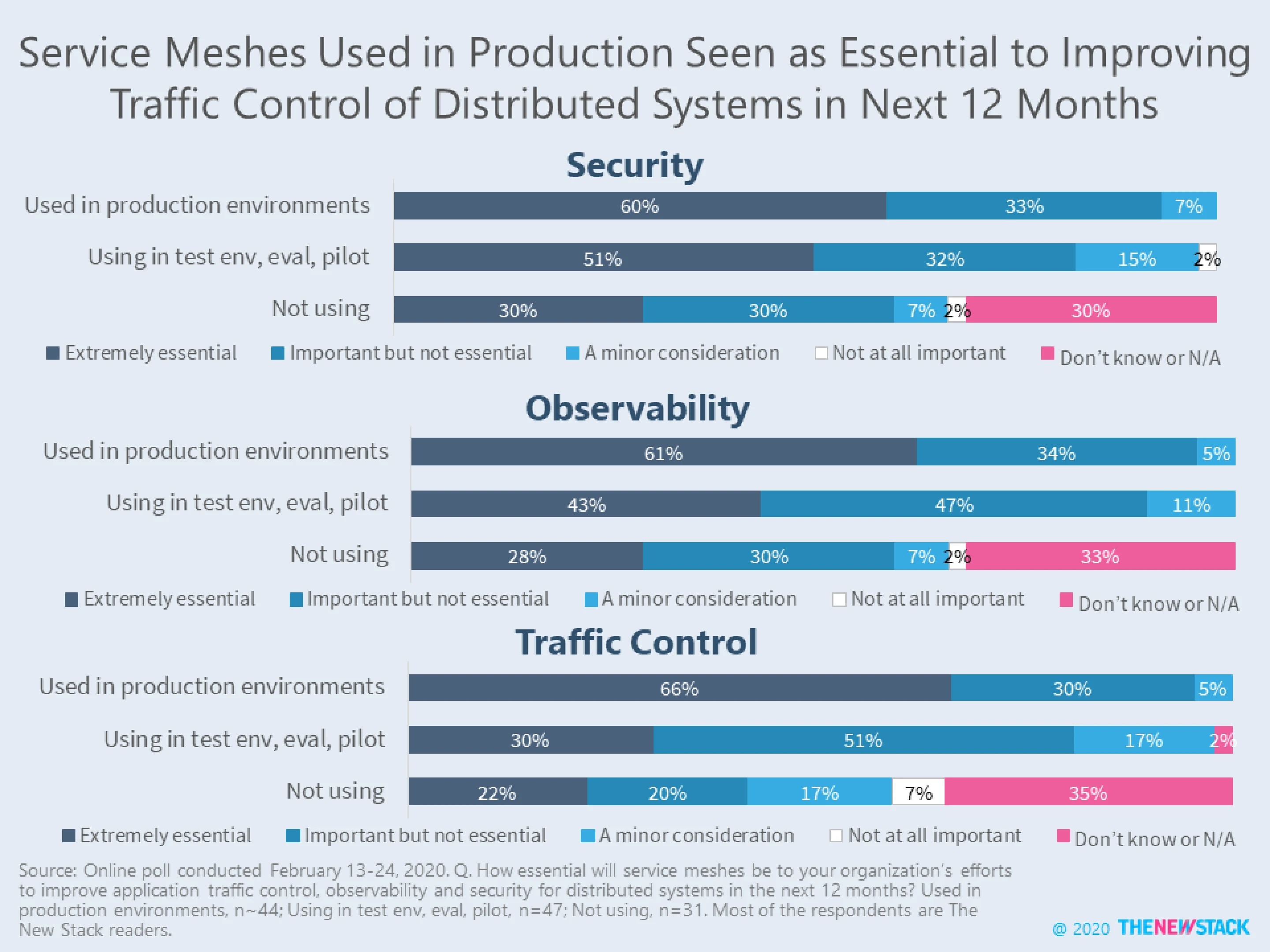

A survey by The New Stack found that security and observability are seen as essential to improving the operation of distributed systems.

Among companies using service mesh in production Kubernetes environments:

- 60% consider service mesh crucial for enhancing application traffic control in distributed systems

- 30% regard service mesh as important for enhancing application traffic control in distributed systems

- 51% of those exploring service mesh perceive it as essential for improving security

- 43% share the same sentiment for observability

These statistics indicate that security and observability are recognized as key capabilities for addressing the significant challenges faced by the DevOps and site reliability engineering communities.

Drawbacks and challenges of service mesh

While service mesh offers significant advantages, it also comes with certain drawbacks and challenges:

- Complexity: A service mesh introduces complexity to the infrastructure stack. Configuration, management, and operation can be challenging, particularly for organizations with limited expertise. You have to invest adequate resources and time to address the learning curve associated with implementation and maintenance.

- Performance overhead: Introducing a service mesh may lead to performance overhead due to the interception and routing of network traffic. Proxies and the associated data plane can impact latency and throughput. Thorough planning and optimization of the service mesh architecture are essential to minimize potential performance impacts.

- Operational overhead: Using a service mesh requires ongoing operational management. Tasks such as updating, scaling, monitoring, and troubleshooting mesh components demand additional effort and resources from the operations team. Assess the team’s capacity to handle this operational overhead before adopting a service mesh.

- Increased network complexity: The introduction of a service mesh adds complexity to the network infrastructure. This can complicate troubleshooting and debugging, and may necessitate adjustments to existing configurations and security policies. Evaluate the impact of this increased network complexity when deciding whether to adopt a service mesh.

- Compatibility and interoperability: A service mesh might not be compatible with every infrastructure component and tool in your ecosystem. Consider the effort required for integration with existing platforms, frameworks, and monitoring systems, as it may involve additional configuration and customization. Achieving interoperability across different cloud providers, container runtimes, and orchestrators may present further challenges.

- Vendor lock-in: Some solutions come with vendor-specific features and dependencies, limiting flexibility and portability across environments. Take into account the potential vendor lock-in associated with specific service mesh implementations. We advise you to assess the long-term implications and evaluate trade-offs before committing to a particular service mesh solution.

- Increased complexity for developers: Adopting a service mesh adds complexity for developers. They will need to familiarize themselves with the service mesh infrastructure and associated tools, which increases their cognitive load (and, in turn, the demand for Platform Engineering). The development workflow may also be affected, and you may have to adjust application architectures and deployment processes accordingly.
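The performance overhead point can be estimated with simple arithmetic. In the sidecar model, a call between two services passes through two proxies: the caller’s sidecar and the callee’s sidecar. The sketch below multiplies that out for a request that fans through several hops. The per-proxy latency is a placeholder assumption, not a benchmark; measure your own mesh before drawing conclusions.

```python
def added_latency_ms(hops, per_proxy_ms, proxies_per_hop=2):
    """Extra latency added by proxies along a chain of service-to-service hops.

    proxies_per_hop defaults to 2 for the sidecar model (caller-side and
    callee-side proxy). per_proxy_ms is an assumed figure you must measure.
    """
    return hops * proxies_per_hop * per_proxy_ms


def overhead_ratio(baseline_ms, hops, per_proxy_ms, proxies_per_hop=2):
    """Added proxy latency as a fraction of the request's baseline latency."""
    return added_latency_ms(hops, per_proxy_ms, proxies_per_hop) / baseline_ms


if __name__ == "__main__":
    # Hypothetical request: 5 hops, 1 ms per proxy, 100 ms baseline.
    print(added_latency_ms(hops=5, per_proxy_ms=1.0))
    print(overhead_ratio(baseline_ms=100.0, hops=5, per_proxy_ms=1.0))
```

The same added latency that is negligible for a slow request can dominate a fast one, which is why call depth and latency budgets matter more than any single per-proxy number.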

Addressing these challenges requires careful planning, expertise, and ongoing maintenance. It’s important to evaluate the specific needs of your organization and assess whether the benefits of a service mesh outweigh the drawbacks in your particular use case. Let’s see how you can evaluate your use case to identify the need for a service mesh.

Evaluating your use case

When evaluating whether a service mesh is suitable for your project, you can consider the following factors:

- Microservices architecture: Service mesh is particularly beneficial in complex microservices architectures where services need to communicate with each other. If your application consists of multiple services that need to interact and require advanced traffic management, security, and observability, a service mesh can provide significant value.

- Scaling and performance requirements: If your application requires efficient load balancing, traffic shaping, and performance optimizations, a service mesh can help. Service mesh offers features like circuit breaking, request retries, and distributed tracing, which can improve the resilience and performance of your services.

- Security and compliance: For applications dealing with sensitive data or requiring strong security measures, a service mesh can enforce security policies, handle encryption, and provide mutual TLS authentication. It offers consistent security controls across services, reducing the risk of unauthorized access and data breaches.

- Observability and troubleshooting: If you need detailed insights into the behavior and performance of your services, a service mesh can provide observability features like metrics and distributed tracing. This can simplify troubleshooting, performance optimization, and capacity planning.

- Complex networking requirements: If your application requires complex networking configurations, such as service discovery, routing, and traffic splitting across multiple environments or cloud providers, a service mesh can help streamline these tasks and abstract away the underlying complexity.

- Operational scalability: If you anticipate a growing number of services or frequent updates and deployments, a service mesh can provide a centralized control plane to manage and automate the operational aspects of service-to-service communication.

- Existing infrastructure and tooling: Consider the compatibility of the service mesh with your existing infrastructure components, container runtimes, orchestration platforms, and monitoring systems. Evaluate whether the service mesh integrates smoothly with your technology stack or requires additional configuration and customization efforts.

- Resource availability and expertise: Finally, assess the availability of resources and expertise within your organization to deploy, operate, and maintain a service mesh. If you don’t have the necessary skills and capacity to handle the complexity associated with a service mesh, acquiring them will be an additional investment of resources.

It’s essential to carefully evaluate your specific requirements, priorities, and constraints before deciding to adopt a service mesh, because it is a long-term and significant investment. You can conduct a proof-of-concept or pilot project to assess how well a service mesh aligns with your use case and whether it provides tangible benefits in terms of scalability, performance, security, and operational efficiency.
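Circuit breaking, mentioned under scaling and performance above, is one of the features a mesh applies in the proxy so that services don’t have to implement it themselves. As a rough illustration of the idea only, here is a minimal in-process sketch. The class name, thresholds, and injected clock are assumptions for this example, and real mesh implementations are configured rather than coded and differ in detail.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout_s=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock          # injectable so tests can control time
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # Open on reaching the threshold, or re-open after a failed trial.
            if (self.opened_at is not None
                    or self.failures >= self.failure_threshold):
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Wrapping an outbound call as `breaker.call(fetch_profile, user_id)` (where `fetch_profile` is a hypothetical client function) makes repeated failures fail fast instead of piling up behind a struggling dependency.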

Scenarios where service mesh shines

There are some situations where service mesh fits best. Some of these scenarios are:

- Large-scale microservice deployments: Service mesh is highly beneficial in large-scale microservice architectures where numerous services need to communicate with each other. It provides a centralized control plane for managing service-to-service communication, enabling seamless interactions and simplifying the management of a large number of services.

- Multi-cloud and hybrid cloud environments: Service mesh is well-suited for scenarios where applications span multiple cloud providers or hybrid cloud environments. It offers consistent networking, security, and observability across different infrastructure environments, ensuring a unified experience for services regardless of the underlying cloud infrastructure.

- Complex network topologies: Service mesh excels in managing complex network topologies, especially when services need to communicate across network boundaries, such as across data centers or regions. It provides the necessary abstraction and routing capabilities to simplify inter-service communication, regardless of the underlying network complexities.

- Compliance and regulatory requirements: Service mesh is advantageous in environments that have stringent compliance and regulatory requirements. It offers robust security features, such as authentication, authorization, and encryption, ensuring that communication between services meets the necessary compliance standards. Service mesh also provides observability features that aid in compliance audits and monitoring.

There are many service meshes out there, and you might be confused about which one to use. You can check out our CNCF landscape navigator, which can help you choose the right service mesh for your needs.

When not to use a service mesh?

A service mesh is not always a good option. It can introduce more hassle into the system than it removes. Let’s look at real-life use cases where you might decide against one, starting with an application that requires extremely low latency or real-time processing capabilities.

Real-time analytics pipeline: In a real-time analytics pipeline, data is continuously streamed from multiple sources, processed in near real-time, and analyzed for insights. This type of application typically requires high data throughput and minimal processing latency to ensure timely analysis and decision-making. In such a scenario, introducing a service mesh with its additional layer of proxies and routing may introduce unnecessary overhead and potential bottlenecks in the data flow. The focus of the application is on efficiently processing and analyzing the streaming data, rather than managing complex service communication patterns.

Instead of a service mesh, alternative lightweight networking solutions like message queues, event-driven architectures, or specialized stream processing frameworks (explored later in the blog) can be used to optimize the data flow and ensure minimal latency. These solutions are often designed specifically for high-throughput data streaming scenarios and can provide better performance and scalability compared to a service mesh.
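As a rough illustration of the queue-based decoupling described above, the sketch below connects a producer and a consumer through a bounded in-process queue from Python’s standard library. It stands in for a real message broker or stream processor only conceptually; `run_pipeline` and its parameters are hypothetical names chosen for this example.

```python
import queue
import threading

_SENTINEL = object()  # marks the end of the stream


def run_pipeline(events, transform, maxsize=100):
    """Stream events from a producer thread to a consumer thread."""
    q = queue.Queue(maxsize=maxsize)
    results = []

    def producer():
        for event in events:
            q.put(event)        # blocks when the queue is full (backpressure)
        q.put(_SENTINEL)

    def consumer():
        while True:
            item = q.get()
            if item is _SENTINEL:
                break
            results.append(transform(item))

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_pipeline(range(5), lambda x: x * 2))
```

The producer and consumer never call each other directly, so there is no request path for a proxy to sit on; the bounded queue is what absorbs bursts and applies backpressure.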

Legacy enterprise application: If you are working with a legacy enterprise application that predates the microservices era and lacks the necessary architectural components for service mesh integration, trying to retrofit a service mesh may not be feasible. Instead, you can focus on other modernization efforts or consider containerization without a service mesh.

Resource-constrained IoT devices: In Internet of Things (IoT) scenarios where devices have limited resources (CPU, memory, network), adding a service mesh can introduce too much overhead in the form of additional complexity, resource consumption, and operational burden. In such cases, simple lightweight communication protocols may be more suitable.

Service mesh alternatives

In the previous section, we explored scenarios where a service mesh might not be the ideal solution. But what if you want some of the benefits of a service mesh without actually implementing one? Fortunately, there are alternative tools that offer comparable functionality without the added overhead and complexity of a service mesh.

In this section, we will delve into these alternative options, examining their capabilities and exploring how they can fulfill your requirements effectively.

- API gateways: API gateways act as a single entry point for external clients to access services. They provide features like authentication, authorization, request routing, rate limiting, and caching. While API gateways primarily focus on client-facing APIs, they can also handle some inter-service communication aspects, especially in monolithic or simple microservices architectures.

- Service proxy: A service proxy is a lightweight intermediary component that sits between services and handles communication between them. It can provide load balancing, circuit breaking, and traffic management capabilities without the extensive feature set of a full-fledged service mesh. Service proxies like Envoy and HAProxy are commonly used for these purposes.

- Ingress controllers: Ingress controllers provide traffic routing and load balancing for incoming external traffic to a cluster or set of services. They handle the entry point into the system and can offer features like SSL termination, path-based routing, and request filtering. Ingress controllers like Nginx Ingress Controller and Traefik are popular choices.

- Message brokers: Message brokers facilitate asynchronous communication between services by decoupling senders and receivers. They support reliable message queuing, publish-subscribe patterns, and event-driven architectures. Message brokers like Apache Kafka, RabbitMQ, and Amazon Simple Queue Service (SQS) are commonly used for reliable messaging.

- Distributed tracing systems: Distributed tracing systems help monitor and trace requests as they propagate through a distributed system. They provide insights into request flows, latency analysis, and troubleshooting. Tools like Jaeger, Zipkin, and AWS X-Ray can be used for distributed tracing.

- Custom networking solutions: Depending on the specific requirements and constraints of your application, it may be more suitable to develop custom networking solutions tailored to your needs. This approach allows for fine-grained control and optimization of service communication while avoiding the additional complexity introduced by a service mesh.

It’s important to note that these alternatives may not offer the same comprehensive feature set as a service mesh, but they can address specific aspects of service communication and provide lightweight solutions for specific use cases. The choice of an alternative depends on the specific requirements, architecture, and trade-offs you are willing to make in your application ecosystem.

Do I need a service mesh?

Making the decision to adopt a service mesh involves careful evaluation and consideration of various factors. Here are some key steps to help you in your decision-making process:

- Weighing the pros and cons: Begin by understanding the advantages and drawbacks of a service mesh, as well as their potential impact on your application architecture and development process. Assess how the benefits align with your specific needs and whether the drawbacks can be mitigated or outweighed by the advantages.

- Conducting a cost-benefit analysis: Evaluate the costs associated with adopting a service mesh, both in terms of implementation effort and ongoing operational overhead. There are hidden costs too, like the learning curve for your team, the need for additional infrastructure resources, and the impact on application performance. Weigh these costs against the expected benefits and determine if the investment is justified.

- Consulting with your team and stakeholders: Discussing the decision with your development team, operations team, and other relevant stakeholders helps you gather their perspectives and insights. Understand their requirements, concerns, and existing pain points related to service communication and management.

- Considering future scalability and flexibility: Any change you make today will affect the future, so carefully evaluate the upcoming scalability and flexibility requirements of your application. A service mesh solution should accommodate your expected growth, handle increased traffic, and support evolving needs. Consider whether the service mesh architecture is flexible enough to adapt to changes in your application’s requirements or if it may introduce unnecessary constraints.

- Assessing existing networking capabilities: Assess whether your existing networking infrastructure, along with available solutions, can effectively meet your service communication and management requirements. Examine if your current setup can adequately address security, observability, and traffic management aspects, or if adopting a service mesh could offer substantial enhancements.

- Piloting and testing: Finally, run a pilot project or proof of concept (PoC) to evaluate the impact of a service mesh in a controlled environment. This can help validate the benefits, test the integration with your existing infrastructure, and identify any unforeseen challenges before fully committing to a service mesh implementation.

It’s essential to ensure that the adoption of a service mesh aligns with your organization’s goals, long-term architectural vision, and growth plans. That’s why you should not rush in; take your time to reach a decision.

Service Mesh Decision Questionnaire

Here are several questions you can ask yourself to get a comprehensive perspective when deciding for or against a service mesh.

- Is my application architecture microservices-based?

- Do I require advanced traffic management and routing capabilities?

- Are observability and monitoring critical for my application?

- Do I have a highly distributed or multi-cloud environment?

- Is security a top concern for my application?

- Am I prepared to invest in the additional complexity and learning curve?

- Can I allocate sufficient resources for service mesh implementation and maintenance?

If the answer to most of these questions is “yes,” and in particular to the last two about complexity and resources, it is advisable to consider using a service mesh. However, if the answer to most of them is “no,” it is recommended to explore the alternative options provided earlier.
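If you want to tally the questionnaire in a repeatable way, one possible approach is sketched below. The answer keys, the split into “need” and “readiness” questions, and the threshold of three are all assumptions made for this example rather than a formal rule; adjust them to your own priorities.

```python
# Hypothetical keys for the seven questions above, in the same order.
NEED_KEYS = ("microservices", "traffic_management", "observability",
             "multi_cloud", "security")
READINESS_KEYS = ("ready_for_complexity", "has_resources")


def recommend(answers, min_needs=3):
    """Turn yes/no answers (a dict of booleans) into a rough recommendation."""
    missing = [k for k in NEED_KEYS + READINESS_KEYS if k not in answers]
    if missing:
        raise ValueError(f"missing answers: {missing}")

    needs = sum(bool(answers[k]) for k in NEED_KEYS)
    ready = all(answers[k] for k in READINESS_KEYS)

    if needs >= min_needs and ready:
        return "consider a service mesh"
    if needs >= min_needs:
        # The need is there, but the team cannot absorb the cost yet.
        return "build capacity first or start with alternatives"
    return "explore alternatives"


if __name__ == "__main__":
    print(recommend({
        "microservices": True, "traffic_management": True,
        "observability": True, "multi_cloud": False, "security": True,
        "ready_for_complexity": True, "has_resources": True,
    }))
```

Treating the last two questions as a gate reflects the point made throughout this post: a genuine need for mesh features does not help if the team cannot carry the operational cost.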

Conclusion

It’s important to recognize that service mesh adoption may not be universally applicable. Factors such as application complexity, performance requirements, team size, available resources, existing networking solutions, and compatibility with legacy systems should be carefully considered. In some cases, alternative solutions like API gateways, service proxies, ingress controllers, message brokers, or custom networking solutions may be more appropriate.

Ultimately, the decision to adopt a service mesh should align with your organization’s goals, architectural vision, and specific use case requirements. Each application and infrastructure is unique, and it’s crucial to evaluate the trade-offs and consider the long-term implications before deciding on the adoption of a service mesh.

By taking a thoughtful and informed approach, you can determine whether a service mesh is the right choice for your application and leverage its capabilities to enhance the management, security, and observability of your distributed system. Once you decide whether service mesh fits your specific requirements or not, you may need external support to get started with its adoption. For that, you can check our service mesh consulting capabilities.

Thank you for reading this post, I hope you found it informative and engaging. To stay updated with similar content, consider subscribing to our newsletter for a weekly dose of cloud-native insights. I value your thoughts and would love to connect with you on Twitter or LinkedIn to initiate a conversation and hear your feedback on this post.
