Machine Learning on Kubernetes

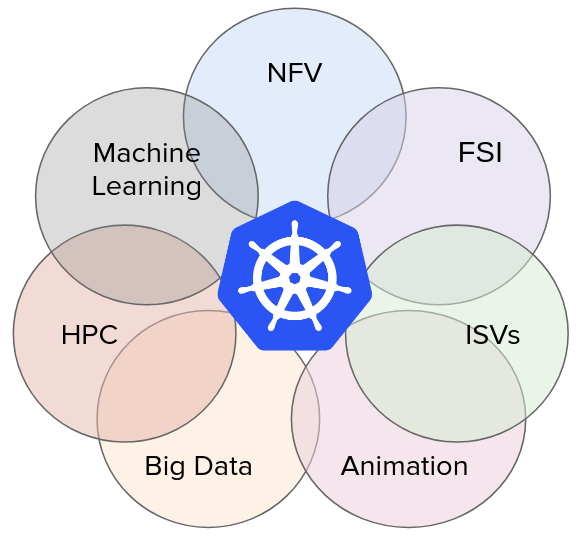

With the rise of containers, the problem of orchestration became more relevant. Over the last few years, various projects and companies tried to address the challenge in their own ways, but Kubernetes emerged as the dominant platform for running containers. Today most companies are running on, or planning to move to, Kubernetes as a platform for various workloads - be it stateless microservices, cron jobs or stateful workloads such as databases. These workloads, however, represent only a small portion of real-world computing workloads. For example, some workloads need specialized hardware such as GPUs. The Resource Management Working Group focuses on exactly this area and works towards aligning projects and technologies so that a more diverse range of workloads can run on the Kubernetes platform.

Image Source: https://kubernetes.io/blog/2017/09/introducing-resource-management-working/

When I read Abhishek Tiwari’s post on big data workloads on Kubernetes, I was intrigued by the amount of work that has happened. This post explores running machine learning/deep learning workloads on Kubernetes, and the various projects and companies working in this area. One of the key requirements for running ML workloads on Kubernetes is support for GPUs. The Kubernetes community has been building GPU support since v1.6, and the Kubernetes documentation has the details.
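As a rough illustration of what requesting a GPU looks like, here is a minimal Python sketch that builds a Pod manifest as a plain dictionary. It assumes a cluster where NVIDIA GPUs are exposed through a device plugin under the resource name `nvidia.com/gpu` (earlier alpha releases used a different name). The pod name, container name and image are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest whose single container asks for `gpus` GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",  # hypothetical container name
                    "image": image,
                    # Assumption: GPUs are advertised as "nvidia.com/gpu"
                    # by a device plugin; they are requested under limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # The image below is a placeholder, not a real registry path.
    manifest = gpu_pod_manifest("gpu-training-demo", "example.com/ml/trainer:latest")
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and submitted with `kubectl apply -f`, since Kubernetes accepts JSON manifests as well as YAML.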

Why Kubernetes?

Before we dive deeper into the various projects and efforts to enable ML/DL on Kubernetes, let’s answer the question - why Kubernetes? Kubernetes offers several advantages as a platform, for example:

* A consistent way of packaging an application (container) enables consistency across the pipeline - from your laptop to the production cluster.

* Kubernetes is an excellent platform to run workloads over multiple commodity hardware nodes while abstracting away the underlying complexity and management of nodes.

* You can scale based on demand - the application as well as the cluster itself.

* Kubernetes is already a well-accepted platform to [run microservices](/monolith-microservices-modernization/), and there are efforts underway, for example, to run serverless workloads on Kubernetes. It would be great to have a single platform that abstracts the underlying resources and is easy for the operator to manage.
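To make the "scale based on demand" point above a little more concrete, the sketch below reproduces the basic replica calculation documented for the Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. This is only the core formula. The real autoscaler also applies a tolerance and stabilization behaviour that are not modelled here, and the function name and default bounds are assumptions made for illustration.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Core HPA rule: ceil(current_replicas * current_metric / target_metric),
    clamped to the [min_replicas, max_replicas] range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU against a 60% target
    print(desired_replicas(4, 90, 60))
```

With 4 replicas running at 90% utilization against a 60% target, the rule asks for 6 replicas. When the load drops, the same formula scales the workload back down.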

RAD Analytics

Some of the early efforts to enable intelligent applications on Kubernetes/OpenShift were made by Red Hat in the form of RAD Analytics. The early focus of the project was to enable Spark clusters on Kubernetes, and this was done by the Oshinko projects. For example, a combination of oshinko-cli and other projects can be used to deploy a Spark cluster on Kubernetes. The tutorials on the website are full of examples, and you can also track the progress of the various projects there.

Paddle from Baidu

Baidu open sourced its deep learning and machine learning platform Paddle (PArallel Distributed Deep LEarning), written in Python, in September 2016. It then announced in February 2017 that Paddle can run on Kubernetes. Paddle can be used for image recognition, natural language processing and recommendation systems. Paddle uses Kubernetes-native constructs such as Jobs to run pieces of training, and the Job finishes when the training run completes. It also runs trainer pods and scales them as needed so that the workload can be distributed effectively.
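The general pattern described above, running several trainer pods as a Kubernetes Job that ends when training completes, can be sketched as follows. This is not Paddle's actual manifest. It is a generic `batch/v1` Job built as a Python dictionary, and the job name, container name and image are hypothetical.

```python
import json


def trainer_job_manifest(name: str, image: str, trainers: int) -> dict:
    """Build a Job that runs `trainers` pods in parallel and is complete
    once every one of them has exited successfully."""
    if trainers < 1:
        raise ValueError("trainers must be at least 1")
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "parallelism": trainers,  # pods running at the same time
            "completions": trainers,  # successful pods needed to finish
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    # Job pods must use Never or OnFailure, not Always.
                    "restartPolicy": "Never",
                    "containers": [
                        {"name": "trainer", "image": image}  # placeholder image
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    job = trainer_job_manifest("training-demo", "example.com/ml/trainer:latest", 4)
    print(json.dumps(job, indent=2))
```

Setting `parallelism` and `completions` to the same value means all trainers start together and the Job is marked complete only after each has finished, which matches the "run until training is done" behaviour that a long-running Deployment would not give you.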

Commercial options

Some companies provide commercial software, or open source software with limited capabilities, for running machine learning on Kubernetes. For example, Seldon has an open source core but requires a commercial license beyond a certain scale. Microsoft has run machine learning on its Kubernetes offering with technology from Litbit. RiseML is a startup that provides a machine learning platform that runs on Kubernetes.

Kubeflow

Announced at KubeCon 2017 in Austin, Kubeflow is an open source project from Google that aims to simplify running machine learning jobs on Kubernetes. The initial version supports Jupyter notebooks and TensorFlow jobs, but the eventual goal is to support additional open source tooling used for machine learning on Kubernetes. Kubeflow wants to be a toolkit that simplifies deploying and scaling machine learning using the tools of the user’s choice.

Conclusion

A data scientist focused on solving machine learning problems may not have the expertise or time to build the scalable infrastructure required to run large-scale jobs. Similarly, an infrastructure engineer who can build scalable infrastructure may not be a master of machine learning. This theme is visible in all the projects we explored - bridging the gap between the two so that we can run large ML/DL programs on scalable infrastructure. Although there is work to be done, this is a great start and something to look forward to with excitement in 2018 and beyond!
