How Docker is disrupting traditional Continuous Delivery?

The first decade of the 21st century was an interesting one for infrastructure automation and for the patterns and practices of continuous integration and delivery. Companies such as Chef (founded 2008) and Puppet Labs (founded 2005) led the early phases of infrastructure automation.

Although Jenkins has been around for some time and has been widely used for continuous integration, continuous delivery picked up a little later. Jez Humble and David Farley’s book “Continuous Delivery”, which is like a Bible on the topic, was published in 2010, and you can clearly see the rise of CD tools in the enterprise soon after.

Consider, for example, IBM’s acquisition of UrbanCode in 2013, CA’s acquisition of Nolio in 2013, the founding of XebiaLabs in 2008, and so on. This was also the time when PaaS platforms rose, albeit with mixed levels of success: Cloud Foundry, Google App Engine, dotCloud, Heroku and many more organisations and projects were trying to make software delivery more efficient.

But a parallel development was happening in 2013 which would disrupt all of the players like IBM and CA - a disruption so fundamental that it would completely alter the way software is developed and deployed.

In case you haven’t guessed it yet, 2013 was the year Docker was born. We have come a long way since then, and containers are an accepted way of deploying applications today. In fact, projects such as Kubernetes, Mesos and Swarm are leading the evolution and creating new rules for operating containers at scale.

But then what happens to configuration-management-based CD pipelines that used Chef cookbooks or Puppet modules and workflows designed in Nolio? That is the question we look at in this post.

Evolution of Configuration Management and Continuous Deployment Tools

Deploying any application fundamentally requires two things - the application code and the runtime or platform on which to run it.

The workflow and orchestration of the various components was handled by continuous deployment (CD) tools, driven either through a user interface (UI) or a Domain Specific Language (DSL), while the underlying infrastructure was provisioned by the associated configuration management tools.

The infrastructure code is written in some DSL, and one writes cookbooks/playbooks for the various stacks and runtimes needed to run an application. In a fairly complex enterprise setup, setting up the runtime for a single application can require many cookbooks.

“DevOps” - are we there yet?

Based on our experience with configuration management projects, what started off as an effort to unify Development (Dev) and Operations (Ops) has not necessarily solved all the problems it aimed to solve. Let’s take some concrete examples.

**Managing dependencies: Now for Infrastructure**

Configuration frameworks employ a DSL to write modules, cookbooks, formulas or runbooks, as they are variously called. For a fairly complex enterprise, these runbooks can easily run into the hundreds, if not more.

Now, to compose an application you might need more than one runbook, and managing their dependencies and versions becomes the new problem at hand.

Some novel solutions, like Berkshelf for Chef, were implemented to manage dependencies. But this meant another set of tools to learn and implement. Naturally, developers faced a steep learning curve before they could start contributing runbooks. This also led some companies to create special “DevOps” teams - which runs counter to the idea of DevOps and is an anti-pattern in itself.
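To make the dependency problem concrete, here is a minimal Python sketch of the ordering part of it: given which runbooks depend on which, work out an order in which they can be applied. The cookbook names are hypothetical, version constraints are left out entirely, and this is only an illustration of the problem, not a description of how Berkshelf or any other tool works internally.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical cookbooks, each mapped to the cookbooks it depends on.
COOKBOOK_DEPS = {
    "webapp": {"java", "nginx"},
    "java": {"base_os"},
    "nginx": {"base_os"},
    "base_os": set(),
}


def converge_order(deps):
    """Return an order in which every cookbook comes after its dependencies."""
    try:
        return list(TopologicalSorter(deps).static_order())
    except CycleError as err:
        # err.args[1] holds the list of nodes that form the cycle.
        raise ValueError(f"circular cookbook dependency: {err.args[1]}") from err


order = converge_order(COOKBOOK_DEPS)
print(order)
```

Even this toy version needs cycle detection, and a real setup adds version pinning on top, which is the extra tooling developers had to learn.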

**Infrastructure as Code**

Writing and debugging infrastructure code is quite different from programming an application. It may not be fair to call one more difficult than the other, but writing infrastructure code has its own challenges. For example, the lack of mature tooling and the need for fine-grained knowledge of the underlying infrastructure make it a tough job. Also, the errors dumped by failing dependent application packages are cryptic, and you sometimes feel like you are navigating a ship without a map.

Often, when a quick fix is the need of the hour, setting up the application from scratch is a wiser approach than debugging the problem.

No, we are not there yet!

The evolution of infrastructure automation tools did help a great deal in enabling self-service infrastructure, but it did not solve the problem of silos.

Specialized infrastructure knowledge meant that at least part of the team had to deal with the underlying infrastructure.

The problem just shifted from one team to another, and from one way of doing things to another - a DSL for infrastructure automation. For developers, infrastructure still remained an elusive land where you had to know the nuts and bolts to be proficient.

At a fundamental level, the application was still packaged as before, for example as a JAR, gem or Python package, while the platform was created by separate tooling and a separate packaging format.

Rise of a unifying force - Docker (Containers)

One of the achievements of containers and Docker is the uniform packaging of the application and its infrastructure needs. Regardless of whether you are running the application locally or on a production cluster, you still have the same Docker image.

With Docker, the application is finally self-contained. You can package the application code as well as its infrastructure dependencies in a simple declarative manner. This also makes it an easy technology to adopt from a developer’s perspective. The container image is almost like a golden image, but much more lightweight and layered, so that layers can be cached. Once the developer is done, they can simply push the image to a registry. From the operations perspective too, this is a much simpler format - you no longer need the long documents or cookbooks that used to be required to run the application. All you need is a container image and some metadata, like environment variables, and that’s about it.
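The remark about cached layers can be illustrated with a small Python sketch. It is a simplified model and not Docker's actual implementation: each layer's ID is derived from its parent layer plus the build step, so a change to one step invalidates only that layer and the ones after it. The step strings, the `[files:...]` markers standing in for file checksums, and the function names are all assumptions made for the example.

```python
import hashlib


def layer_id(parent_id: str, step: str) -> str:
    """Derive a layer ID from the parent layer's ID and the build step."""
    return hashlib.sha256(f"{parent_id}\n{step}".encode()).hexdigest()[:12]


def build(steps: list[str], cache: set[str]) -> tuple[str, int]:
    """Walk the steps, reusing cached layers and building the rest.

    Returns the final image ID and the number of layers actually rebuilt.
    """
    parent, rebuilt = "scratch", 0
    for step in steps:
        current = layer_id(parent, step)
        if current not in cache:
            cache.add(current)  # simulate building and storing the layer
            rebuilt += 1
        parent = current
    return parent, rebuilt


# Illustrative build steps; "[files:...]" stands in for a checksum of copied files.
v1 = [
    "FROM python:3.12-slim",
    "COPY requirements.txt . [files:aaa]",
    "RUN pip install -r requirements.txt",
    "COPY app/ app/ [files:111]",
]
v2 = v1[:3] + ["COPY app/ app/ [files:222]"]  # only the application source changed

cache: set[str] = set()
first = build(v1, cache)   # cold cache: every layer is built
second = build(v2, cache)  # only the last layer differs
third = build(v1, cache)   # everything is already cached
print(first[1], second[1], third[1])  # prints "4 1 0"
```

In this model, changing only the application source rebuilds a single layer, while the base image and installed dependencies are reused, which is what makes the format so much lighter than a classic golden image.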

With Docker, a lot of configuration management code is replaced by Docker images and Dockerfiles. This also simplifies application dependency management, as there is no point in writing tonnes of code for application configuration that is hardly going to change.

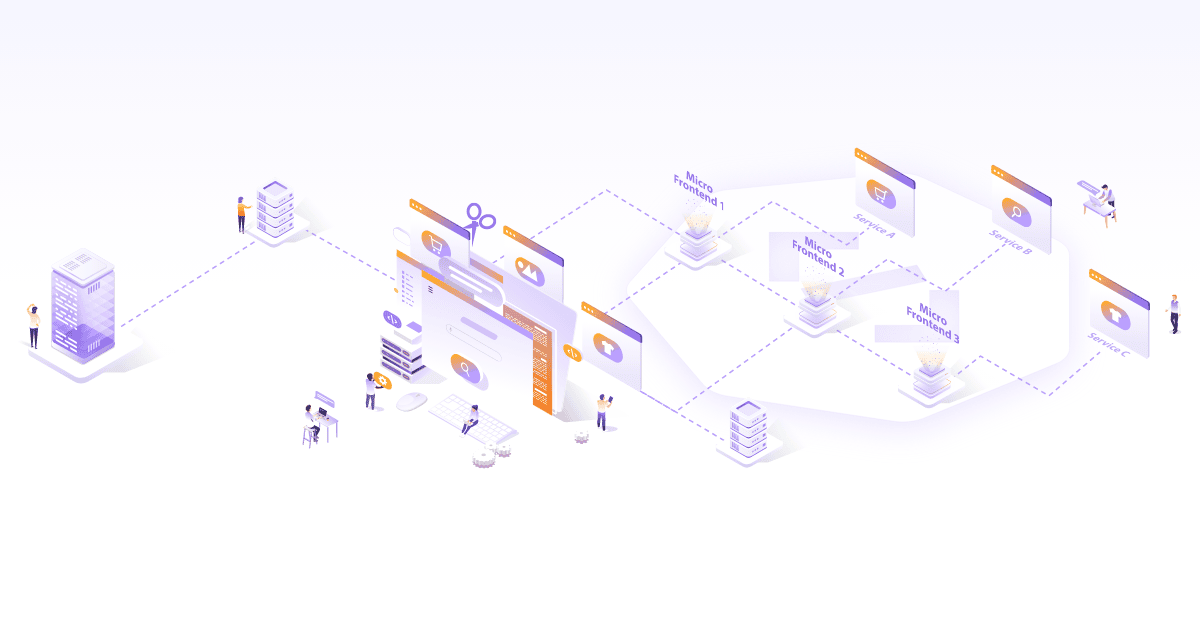

From an operability perspective, operations can now really focus on building and managing a platform that runs containers. To create and maintain that platform, you can use configuration management tools in combination with newer platforms meant for containers. This has also given rise to container orchestrators such as Mesos, Kubernetes and Swarm, and container platforms such as Rancher and OpenShift.

Nirvana at last?

Does that mean that by adopting containers we have solved all the problems of software delivery? Certainly not. But we have moved a step closer to application portability and to a self-sustaining CD pipeline that truly realizes the goals of DevOps.

However, there are newer challenges, such as how to orchestrate containers across a large set of virtual machines. How does one service discover another when a container is ephemeral and can move from one machine to another? And how do you manage persistent storage with containers moving around?

I will discuss these areas in detail in the forthcoming parts of this post. Stay tuned!

