How to Build Your Own MCP Server

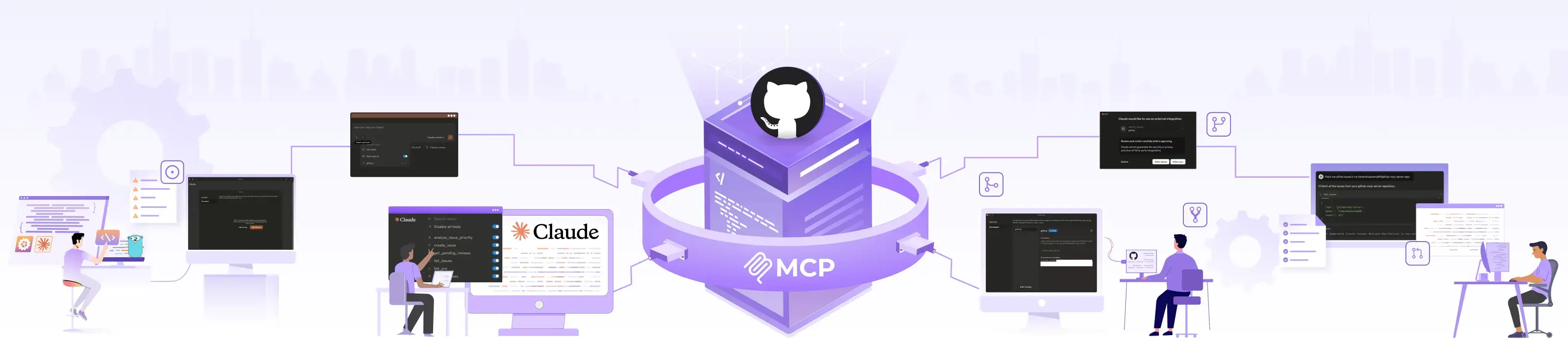

In our previous blog post, we explored the Model Context Protocol (MCP), a lightweight JSON-RPC interface that standardizes communication between large language models (LLMs) and external data sources and tools through a client-server architecture. We discussed what MCP is and why it matters for LLM integration.

Now, let’s get hands-on. In this blog post, we’ll dive into the practical side: how to build and run an MCP server using open source tools, focusing on real-world implementation patterns and best practices for creating production-ready integrations.

Understanding the MCP server

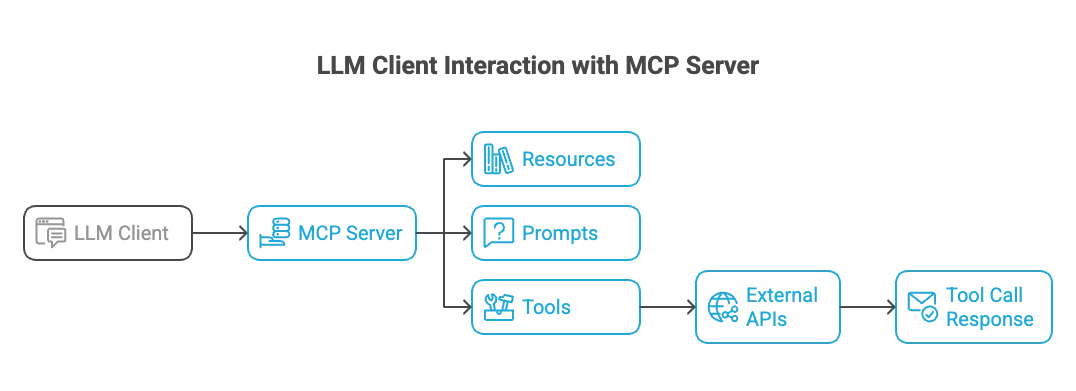

Understanding the technical foundation of MCP servers is crucial before diving into implementation. An MCP server acts as a middleware layer that exposes external capabilities to LLMs through a standardized protocol.

What is an MCP server?

An MCP server is a standalone process that implements the Model Context Protocol specification, acting as a bridge between LLMs and external systems. Technically, it’s a JSON-RPC 2.0 server that:

- Exposes tools: Functions that LLMs can invoke to perform actions (API calls, data retrieval, computations)

- Manages resources: Provides access to external data sources like files, databases, or web APIs

- Handles prompts: Offers templated prompts that can be dynamically populated with context

- Maintains state: Can hold conversation context and manage sessions between requests

The server communicates over a standard transport (stdin/stdout for local processes, HTTP for remote ones, or a custom transport such as WebSockets) using a well-defined protocol with schema-validated messages and structured error handling.
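To make the transport idea concrete, here is a minimal sketch of a JSON-RPC 2.0 loop that reads one message per line and writes one reply per line, using only the Go standard library. It is not how mcp-go is implemented; the names handleLine and serve are hypothetical, only a ping method is handled, and the demo feeds an in-memory reader where a real stdio server would pass os.Stdin.

```go
package main

import (
	"bufio"
	"encoding/json"
	"fmt"
	"io"
	"os"
	"strings"
)

// request and response mirror the JSON-RPC 2.0 envelope.
type request struct {
	ID     json.RawMessage `json:"id"`
	Method string          `json:"method"`
}

type rpcError struct {
	Code    int    `json:"code"`
	Message string `json:"message"`
}

type response struct {
	JSONRPC string          `json:"jsonrpc"`
	ID      json.RawMessage `json:"id"`
	Result  any             `json:"result,omitempty"`
	Error   *rpcError       `json:"error,omitempty"`
}

// handleLine turns one incoming message into a reply. The second return
// value is false for notifications (messages without an id), which get no reply.
// For brevity, any decode failure is reported as a parse error.
func handleLine(line []byte) (response, bool) {
	resp := response{JSONRPC: "2.0"}
	var req request
	if err := json.Unmarshal(line, &req); err != nil {
		resp.ID = json.RawMessage("null")
		resp.Error = &rpcError{Code: -32700, Message: "parse error"}
		return resp, true
	}
	if len(req.ID) == 0 {
		return resp, false
	}
	resp.ID = req.ID
	switch req.Method {
	case "ping":
		resp.Result = map[string]any{} // empty result object
	default:
		resp.Error = &rpcError{Code: -32601, Message: "method not found: " + req.Method}
	}
	return resp, true
}

// serve reads newline-delimited messages from r and writes replies to w.
func serve(r io.Reader, w io.Writer) error {
	scanner := bufio.NewScanner(r)
	enc := json.NewEncoder(w) // Encode appends a newline after each reply
	for scanner.Scan() {
		if resp, ok := handleLine(scanner.Bytes()); ok {
			if err := enc.Encode(resp); err != nil {
				return err
			}
		}
	}
	return scanner.Err()
}

func main() {
	// A real stdio server would call serve(os.Stdin, os.Stdout).
	in := strings.NewReader(`{"jsonrpc":"2.0","id":1,"method":"ping"}` + "\n")
	if err := serve(in, os.Stdout); err != nil {
		fmt.Fprintln(os.Stderr, "server error:", err)
	}
}
```

Keeping the per-message logic in a pure function separate from the I/O loop makes it easy to test without a running process.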

MCP Request/Response Shape

MCP communication follows the JSON-RPC 2.0 specification. In our previous blog post, we covered the full request flow, including how MCP works.

Here, we’ll focus specifically on the tool call request/response shape:

Tool Call Request

{

"jsonrpc": "2.0",

"id": "unique_request_id",

"method": "tools/call",

"params": {

"name": "tool_name",

"arguments": {

"param1": "value1",

"param2": "value2"

}

}

}

Tool Call Response

The corresponding response format includes either successful results or error information:

{

"jsonrpc": "2.0",

"id": "unique_request_id",

"result": {

"content": [

{

"type": "text",

"text": "Tool execution result"

}

]

}

}

The standardized format ensures consistent communication patterns across different MCP implementations and enables robust error handling through the JSON-RPC error response mechanism. Notably, MCP draws inspiration from established protocols like the Language Server Protocol (LSP), which also relies on JSON-RPC. The shared foundation promotes compatibility and familiarity for developers who have worked with modern tooling architectures.
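As a rough illustration of these shapes in Go, the sketch below decodes a tool call and builds a text result. It assumes the tools/call method with name and arguments params, as defined by the MCP specification, and the optional isError flag that the specification uses for tool-level failures. The type and function names (toolCall, toolResult, decodeToolCall, textResult) are hypothetical and not part of any library.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// toolCall is the params object of a tools/call request.
type toolCall struct {
	Name      string         `json:"name"`
	Arguments map[string]any `json:"arguments"`
}

type contentItem struct {
	Type string `json:"type"`
	Text string `json:"text"`
}

// toolResult is the result object returned for a tool call.
type toolResult struct {
	Content []contentItem `json:"content"`
	IsError bool          `json:"isError,omitempty"`
}

// decodeToolCall extracts the tool name and arguments from a raw request.
func decodeToolCall(raw []byte) (toolCall, error) {
	var envelope struct {
		Method string   `json:"method"`
		Params toolCall `json:"params"`
	}
	if err := json.Unmarshal(raw, &envelope); err != nil {
		return toolCall{}, fmt.Errorf("invalid JSON-RPC message: %w", err)
	}
	if envelope.Method != "tools/call" {
		return toolCall{}, fmt.Errorf("unexpected method %q", envelope.Method)
	}
	if envelope.Params.Name == "" {
		return toolCall{}, errors.New("params.name is required")
	}
	return envelope.Params, nil
}

// textResult wraps plain text in the content array the client expects.
func textResult(text string, isError bool) toolResult {
	return toolResult{
		Content: []contentItem{{Type: "text", Text: text}},
		IsError: isError,
	}
}

func main() {
	raw := []byte(`{"jsonrpc":"2.0","id":"1","method":"tools/call",
		"params":{"name":"list_prs","arguments":{"owner":"gptscript-ai","repo":"gptscript"}}}`)
	call, err := decodeToolCall(raw)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	out, _ := json.Marshal(textResult("called "+call.Name, false))
	fmt.Println(string(out))
}
```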

Tool registration and metadata

Tool registration is the process of defining and exposing capabilities to the MCP client. Each tool requires comprehensive metadata, including:

- Name: Unique identifier used to invoke the tool, like a function name in Python or JavaScript. It must be unique within the server, and also clear and predictable so the LLM can reason about it effectively.

- Description: Human-readable explanation of what the tool does

- Parameters: Schema-validated input parameters with types, requirements, and descriptions

- Input Schema: JSON Schema validation for parameters

The metadata covers how the LLM understands and interacts with the tool. The name and description should be optimized for AI reasoning. A well-named and clearly described tool increases the likelihood that the LLM will select and use it correctly during inference.

The registration process typically involves defining input parameters with types, descriptions, and validation rules. This schema-driven approach enables static analysis and prevents runtime errors from invalid parameter combinations. Agents like Obot, Claude Desktop, and other MCP-compatible orchestrators can use metadata to reason about tool selection and usage.
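The sketch below shows one way such metadata could drive validation before a handler runs. It is a simplified stand-in for JSON Schema validation, not what mcp-go does internally: toolSpec, param, validateArgs, and listPRsSpec are hypothetical names, and only string parameters are checked.

```go
package main

import (
	"fmt"
	"sort"
)

// param describes one input parameter of a tool.
type param struct {
	Type        string
	Description string
	Required    bool
}

// toolSpec is the metadata a client sees when it lists tools.
type toolSpec struct {
	Name        string
	Description string
	Params      map[string]param
}

// validateArgs rejects missing, mistyped, and unknown parameters.
func validateArgs(spec toolSpec, args map[string]any) error {
	names := make([]string, 0, len(spec.Params))
	for name := range spec.Params {
		names = append(names, name)
	}
	sort.Strings(names) // check in a stable order so errors are reproducible
	for _, name := range names {
		p := spec.Params[name]
		value, present := args[name]
		if !present {
			if p.Required {
				return fmt.Errorf("%s: missing required parameter %q", spec.Name, name)
			}
			continue
		}
		if p.Type == "string" {
			if _, ok := value.(string); !ok {
				return fmt.Errorf("%s: parameter %q must be a string", spec.Name, name)
			}
		}
	}
	for name := range args {
		if _, known := spec.Params[name]; !known {
			return fmt.Errorf("%s: unknown parameter %q", spec.Name, name)
		}
	}
	return nil
}

// listPRsSpec mirrors the parameters of a PR-listing tool.
var listPRsSpec = toolSpec{
	Name:        "list_prs",
	Description: "List pull requests in a GitHub repository",
	Params: map[string]param{
		"owner": {Type: "string", Description: "GitHub org or user", Required: true},
		"repo":  {Type: "string", Description: "GitHub repository name", Required: true},
		"state": {Type: "string", Description: "open, closed, or all"},
	},
}

func main() {
	err := validateArgs(listPRsSpec, map[string]any{"owner": "gptscript-ai"})
	fmt.Println(err)
}
```

Error messages that name the tool and the offending parameter give the LLM enough information to correct its next call.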

Prompt templates and data objects

MCP servers can also expose prompt templates and structured data objects beyond just tools. Prompt templates allow servers to provide reusable prompts with variable substitution, while data objects enable servers to expose structured information that LLMs can query and manipulate.

Here’s a simple prompt template example:

template := mcp.NewPrompt("summarize_prs",

mcp.WithPromptDescription("Summarize pull requests for a repository"),

mcp.WithArgument("repo_data",

mcp.ArgumentDescription("Repository PR data to summarize"),

),

)
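Variable substitution itself can be illustrated with the standard library. The sketch below renders a prompt for the summarize_prs example with Go's text/template; the template text and the renderPrompt helper are assumptions made for illustration, and an MCP library would normally build the prompt messages in its prompt handler.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// summarizePRs is a hypothetical prompt body with one variable, repo_data.
const summarizePRs = "Summarize the following pull requests:\n{{.repo_data}}"

// renderPrompt fills the template and fails if an argument is missing.
func renderPrompt(text string, args map[string]string) (string, error) {
	tmpl, err := template.New("prompt").Option("missingkey=error").Parse(text)
	if err != nil {
		return "", err
	}
	var b strings.Builder
	if err := tmpl.Execute(&b, args); err != nil {
		return "", err
	}
	return b.String(), nil
}

func main() {
	out, err := renderPrompt(summarizePRs, map[string]string{
		"repo_data": "- #12: Fix flaky test\n- #15: Update docs",
	})
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(out)
}
```

The missingkey=error option turns a forgotten argument into an error instead of silently rendering an incomplete prompt.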

Setting up a minimal MCP server

Building an MCP server means carefully designing tools, handling errors clearly, and communicating effectively with clients that consume the server’s APIs. We’ll use the mcp-go library for its robust implementation and active community support. In the following example, we will build a custom GitHub MCP server that can list pull requests and issues, create issues, and more.

Source code: https://github.com/infracloudio/github-mcp-server

Prerequisites

- Golang 1.19 or later

- GitHub Personal Access Token with appropriate permissions

- Claude Desktop

Installation

- Clone the repository.

git clone <REPO>

cd github-mcp-server

- Install the dependencies.

go mod tidy

- Set the environment variable.

export GITHUB_TOKEN="your_github_token_here"

Replace "your_github_token_here" with your actual GitHub Personal Access Token.

Code breakdown

The project is structured as follows:

github-mcp-server/

├── go.mod

├── go.sum

├── main.go // Main server logic, tool registration

└── tools/

└── github.go // Business logic for GitHub API interaction

Server initialization

The MCP server starts by defining its identity and capabilities. We create a server instance with basic configuration and register our tools.

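s := server.NewMCPServer(

"GitHub MCP Server", // Server name

"0.1.0", // Server version

server.WithToolCapabilities(false), // Enable tools; false = no tool list-change notifications

)

What does WithToolCapabilities(false) do?

In mcp-go, this option enables the tools capability, and its boolean argument controls whether the server advertises notifications when its tool list changes. Passing false fits this server because its tools are registered once at startup and never change. Combined with the stdio transport, this keeps the server logic straightforward: one request, one response. The result is cleaner, well-structured JSON output and simpler handler implementations.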

Tool definitions

Each tool represents a specific action the server can perform. Here’s what our GitHub server provides:

List Pull Requests Tool (list_prs)

Retrieves pull requests from a specified GitHub repository with optional state filtering. This tool expects owner and repo as required string parameters, and an optional state string parameter.

listPRsTool := mcp.NewTool("list_prs",

mcp.WithDescription("List pull requests in a GitHub repository"),

mcp.WithString("owner", // Parameter name

mcp.Required(), // Makes this parameter mandatory

mcp.Description("GitHub org or user"), // Parameter description

),

mcp.WithString("repo",

mcp.Required(),

mcp.Description("GitHub repository name"),

),

mcp.WithString("state",

mcp.Description("State of PRs to list (open, closed, all). Defaults to open"),

),

)

List Issues Tool (list_issues)

Fetches issues from a GitHub repository with optional state filtering. Similar to listPRsTool, this is a tool that lists issues with owner, repo, and optional state parameters.

listIssuesTool := mcp.NewTool("list_issues",

mcp.WithDescription("List issues in a GitHub repository"),

// ... parameters similar to list_prs ...

)

Create Issue Tool (create_issue)

Creates a new issue in a GitHub repository with optional metadata like labels and assignees.

createIssueTool := mcp.NewTool("create_issue",

mcp.WithDescription("Create a new issue in a GitHub repository"),

mcp.WithString("owner", mcp.Required(), mcp.Description("GitHub organization or user (e.g., 'microsoft')")),

mcp.WithString("repo", mcp.Required(), mcp.Description("GitHub repository name (e.g., 'vscode')")),

mcp.WithString("title", mcp.Required(), mcp.Description("Title of the new issue")),

mcp.WithString("body", mcp.Description("Body content of the new issue (optional)")),

mcp.WithString("labels", mcp.Description("Comma-separated list of labels to add (e.g., 'bug,documentation') (optional)")),

mcp.WithString("assignees", mcp.Description("Comma-separated list of GitHub usernames to assign (e.g., 'user1,user2') (optional)")),

)

We have focused on the three core tools above to keep things simple. However, the full repo includes additional tool implementations, such as:

- search_issues: Search for issues using keywords.

- get_pending_reviews: List pull requests that are waiting for review.

- analyze_issue_priority: Estimate the priority of issues based on their content.

In real-world scenarios, MCP servers often expose many more tools based on the systems they integrate with and the workflows they support.

Tool Registration and server startup

After defining tools, we register them with handler functions and start the server:

s.AddTool(listPRsTool, listOpenPRsHandler)

s.AddTool(listIssuesTool, listOpenIssuesHandler)

s.AddTool(createIssueTool, createIssueHandler)

if err := server.ServeStdio(s); err != nil {

fmt.Printf("Server error: %v\n", err)

}

This associates the defined tools (listPRsTool, listIssuesTool, createIssueTool) with their respective handler functions (listOpenPRsHandler, listOpenIssuesHandler, createIssueHandler).
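Conceptually, registration is a lookup table from tool name to handler. The following sketch shows that idea with the standard library only; it is not mcp-go's implementation, and registry, add, and call are hypothetical names.

```go
package main

import "fmt"

// handler is a simplified tool handler: arguments in, text out.
type handler func(args map[string]any) (string, error)

// registry maps tool names to their handlers.
type registry struct {
	handlers map[string]handler
}

func newRegistry() *registry {
	return &registry{handlers: map[string]handler{}}
}

// add registers a handler and rejects empty or duplicate names.
func (r *registry) add(name string, h handler) error {
	if name == "" || h == nil {
		return fmt.Errorf("a tool needs a name and a handler")
	}
	if _, exists := r.handlers[name]; exists {
		return fmt.Errorf("tool %q is already registered", name)
	}
	r.handlers[name] = h
	return nil
}

// call dispatches a tool call to the matching handler.
func (r *registry) call(name string, args map[string]any) (string, error) {
	h, ok := r.handlers[name]
	if !ok {
		return "", fmt.Errorf("unknown tool %q", name)
	}
	return h(args)
}

func main() {
	reg := newRegistry()
	_ = reg.add("list_prs", func(args map[string]any) (string, error) {
		return fmt.Sprintf("would list PRs for %v/%v", args["owner"], args["repo"]), nil
	})
	out, err := reg.call("list_prs", map[string]any{"owner": "gptscript-ai", "repo": "gptscript"})
	fmt.Println(out, err)
}
```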

Handler implementation pattern

Each tool follows a consistent handler pattern: the handler receives the tool call, parses its arguments, delegates to the business logic, formats the response, and handles errors gracefully.

func listOpenPRsHandler(ctx context.Context, req mcp.CallToolRequest) (*mcp.CallToolResult, error) {

// 1. Marshal arguments:

// The input arguments (req.Params.Arguments) are initially a map.

// They are marshaled into JSON so they can be unmarshaled into a specific struct

// in the business logic layer (tools.GetOpenPRs).

raw, err := json.Marshal(req.Params.Arguments)

if err != nil {

return nil, errors.New("failed to marshal arguments")

}

// 2. Delegate to business logic:

// Calls the actual function that interacts with the GitHub API.

prList, err := tools.GetOpenPRs(ctx, raw) // raw is json.RawMessage

if err != nil {

return nil, err // Propagate errors from the business logic

}

// 3. Handle empty results:

if len(prList) == 0 {

return mcp.NewToolResultText("No open pull requests found."), nil

}

// 4. Format response:

// Constructs a string output from the list of PRs/issues.

var output string

for _, pr := range prList { // or issue for listOpenIssuesHandler

output += fmt.Sprintf("- #%d: %s\n", pr.GetNumber(), pr.GetTitle())

}

return mcp.NewToolResultText(output), nil // Returns the result as a simple text response

}

Business logic separation

The GitHub API interactions are separated into the tools package, keeping the server logic clean and maintainable.

GitHubClient setup

Authentication and client initialization are handled through environment variables:

var GitHubClient = func(ctx context.Context) *github.Client {

token := os.Getenv("GITHUB_TOKEN") // Retrieves the token from environment variable

ts := oauth2.StaticTokenSource(&oauth2.Token{AccessToken: token})

tc := oauth2.NewClient(ctx, ts)

return github.NewClient(tc) // Returns an authenticated GitHub API client

}
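Because the client above reads GITHUB_TOKEN without checking it, a missing token only shows up later as an authentication failure from the API. A small startup check, sketched below with a hypothetical githubToken helper, can surface the problem earlier. The lookup function is injected so the check can be tested without touching the real environment.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"strings"
)

// errMissingToken is returned when GITHUB_TOKEN is absent or blank.
var errMissingToken = errors.New("GITHUB_TOKEN is not set or is empty")

// githubToken reads the token through lookup, which has the same
// signature as os.LookupEnv.
func githubToken(lookup func(string) (string, bool)) (string, error) {
	token, ok := lookup("GITHUB_TOKEN")
	token = strings.TrimSpace(token)
	if !ok || token == "" {
		return "", errMissingToken
	}
	return token, nil
}

func main() {
	// Report on stderr: with the stdio transport, stdout is reserved
	// for protocol messages.
	if _, err := githubToken(os.LookupEnv); err != nil {
		fmt.Fprintln(os.Stderr, "startup check:", err)
		return
	}
	fmt.Fprintln(os.Stderr, "GITHUB_TOKEN found")
}
```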

API integration functions

The core logic for each tool is implemented in dedicated functions under tools/github.go. These functions handle specific GitHub operations with proper error handling and default values:

GetOpenIssues function: Connects to GitHub API, retrieves issues based on parameters, and returns structured data.

func GetOpenIssues(ctx context.Context, input json.RawMessage) ([]*github.Issue, error) {

var params ToolInput

if err := json.Unmarshal(input, &params); err != nil {

return nil, err

}

client := GitHubClient(ctx)

if params.State == "" {

params.State = "open"

}

issues, _, err := client.Issues.ListByRepo(ctx, params.Owner, params.Repo, &github.IssueListByRepoOptions{

State: params.State,

ListOptions: github.ListOptions{PerPage: 100},

})

if err != nil {

return nil, err

}

// Filter out pull requests (GitHub API returns PRs as issues)

var actualIssues []*github.Issue

for _, issue := range issues {

if !issue.IsPullRequest() {

actualIssues = append(actualIssues, issue)

}

}

return actualIssues, nil

}

GetOpenPRs function: Fetches pull requests from GitHub with state filtering and pagination support.

CreateIssue function: Creates new GitHub issues with optional labels and assignee assignment.
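GetOpenIssues above requests a single page of up to 100 items. For repositories with more results, a pagination loop is needed. The sketch below shows the general shape with a hypothetical fetch function that returns the next page number and uses 0 to signal the last page, which mirrors the NextPage convention of go-github responses. collectAll and the page cap are assumptions for illustration, not code from the repository.

```go
package main

import (
	"fmt"
)

// collectAll walks pages until fetch reports no next page (0) or
// maxPages is reached, so a huge repository cannot stall the tool.
func collectAll(fetch func(page int) ([]string, int, error), maxPages int) ([]string, error) {
	var all []string
	page := 1
	for i := 0; i < maxPages; i++ {
		items, next, err := fetch(page)
		if err != nil {
			return nil, fmt.Errorf("fetching page %d: %w", page, err)
		}
		all = append(all, items...)
		if next == 0 {
			return all, nil
		}
		page = next
	}
	return all, fmt.Errorf("stopped after %d pages; more results remain", maxPages)
}

func main() {
	// Fake two-page data source standing in for the GitHub API.
	fake := func(page int) ([]string, int, error) {
		if page == 1 {
			return []string{"#1 Fix typo", "#2 Add tests"}, 2, nil
		}
		return []string{"#3 Update docs"}, 0, nil
	}
	titles, err := collectAll(fake, 10)
	fmt.Println(len(titles), err)
}
```

Capping the number of pages keeps response sizes and API usage bounded, which matters when the consumer is an LLM with a limited context window.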

This handler pattern provides consistency across different tools while keeping the codebase organized.

This foundation demonstrates how to build MCP servers that integrate with external APIs while maintaining clean architecture and proper error handling. The pattern scales well for adding additional tools and integrating with other services.

Building and deploying

Thorough testing is crucial for MCP servers, especially when they interact with external systems like GitHub. This involves unit tests for individual functions, integration tests for tool handlers, and end-to-end testing with an MCP client like Claude Desktop.

Setting up for Claude Desktop integration

The GitHub MCP Server can be integrated with Claude Desktop for interactive testing and use.

-

Build the server binary:

For the GitHub MCP server, compile the Go program to produce an executable binary.

# For GitHub MCP Server cd /path/to/your/github-mcp-server go build -o bin/github-mcp-server main.goYou can also download the build file directly from the repo.

Ensure the binary has execute permissions (chmod +x bin/<server_binary>).

-

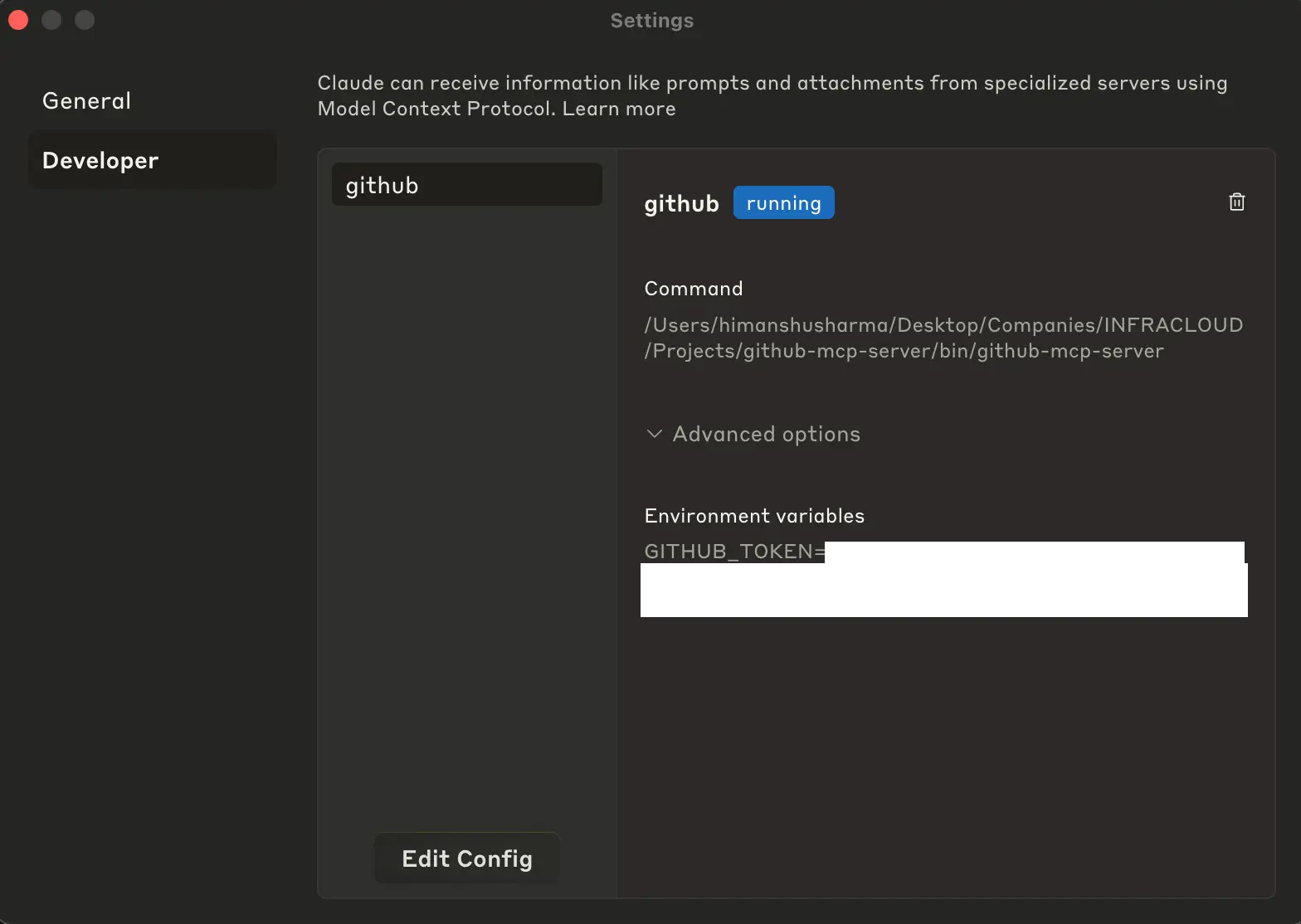

Configure Claude Desktop:

To set up Claude Desktop for use with your MCP Server, refer to the official Claude documentation on starting with Model Context Protocol for detailed instructions across platforms.

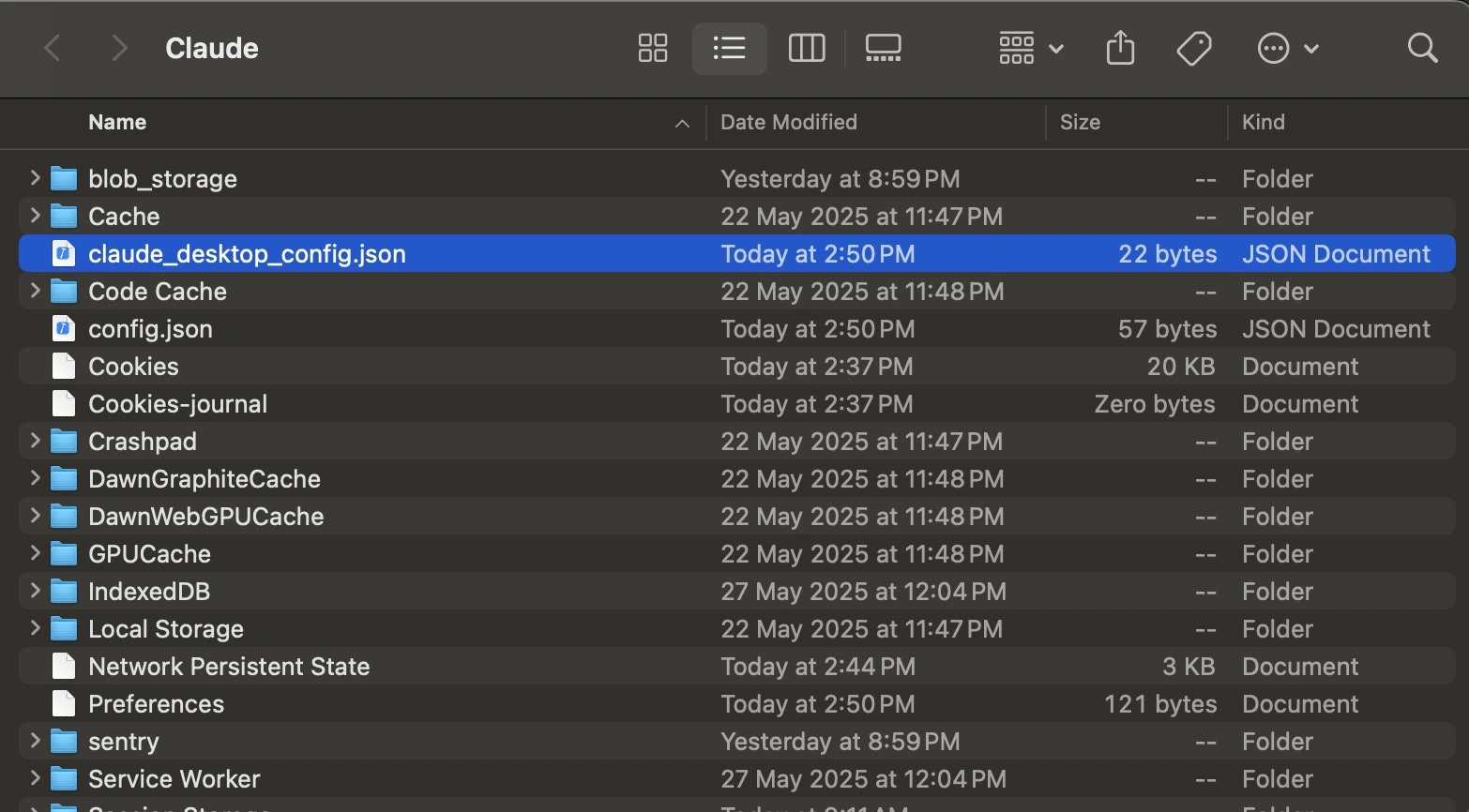

Edit the Claude Desktop configuration file (e.g., ~/Library/Application Support/Claude/claude_desktop_config.json on macOS).

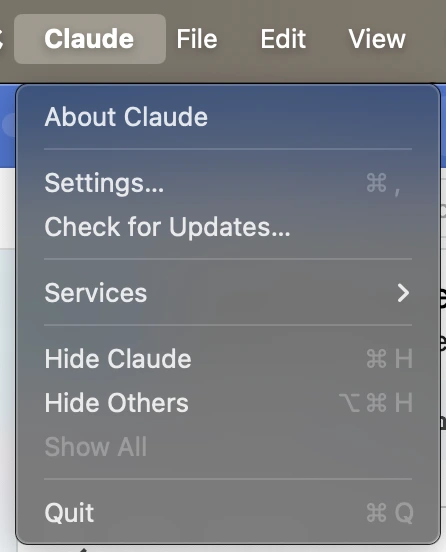

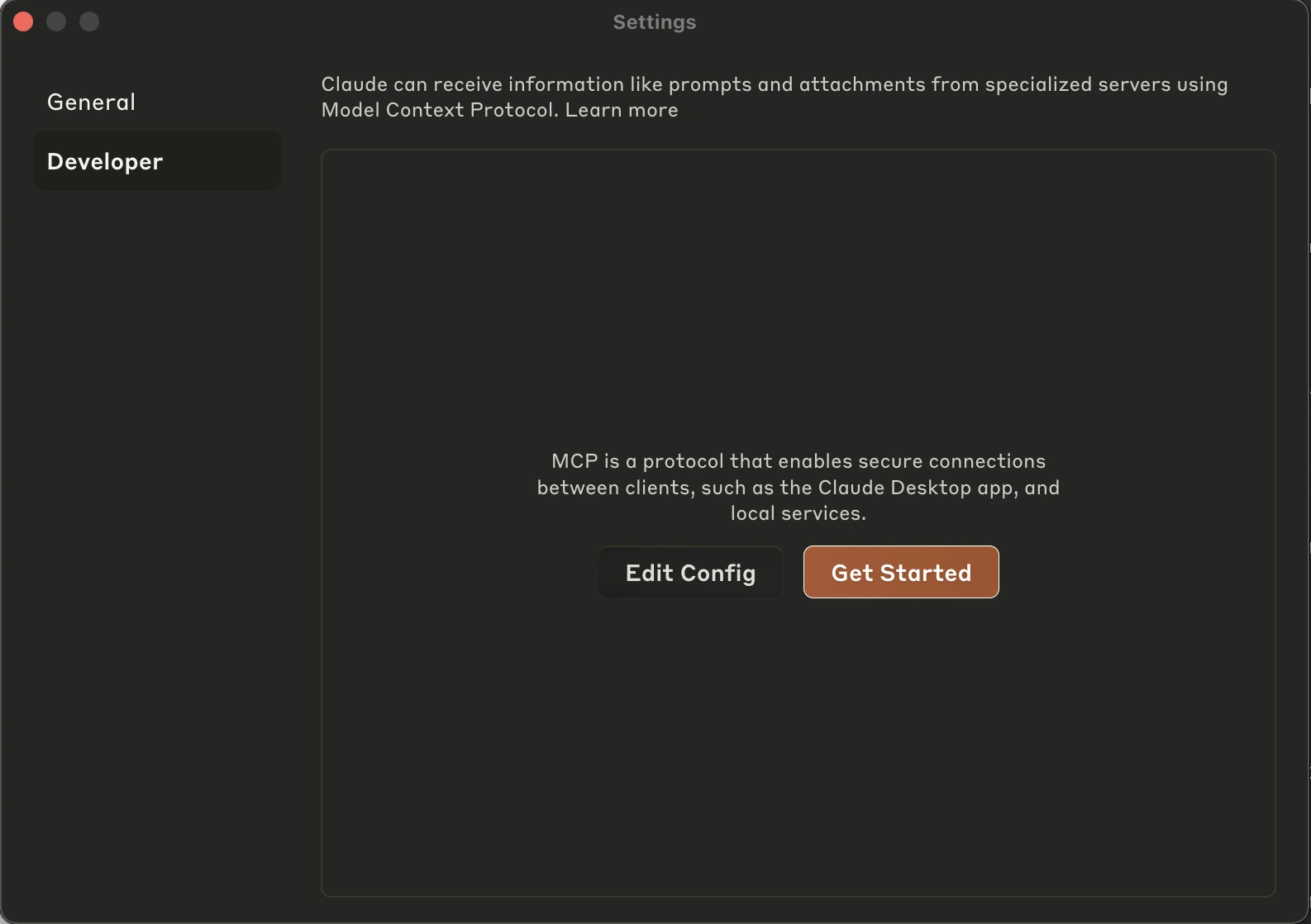

On macOS, open Claude Desktop’s Settings, select the Developer tab from the side panel, and click Edit Config to open the file.

Add entries for your MCP servers within the mcpServers object.

{

"mcpServers": {

"github": {

"command": "/path/to/your/github-mcp-server",

"env": {

"GITHUB_TOKEN": "your_actual_github_personal_access_token"

}

}

}

}

Replace paths with actual absolute paths and ensure GITHUB_TOKEN is correct.

- Restart Claude Desktop for the changes to take effect.

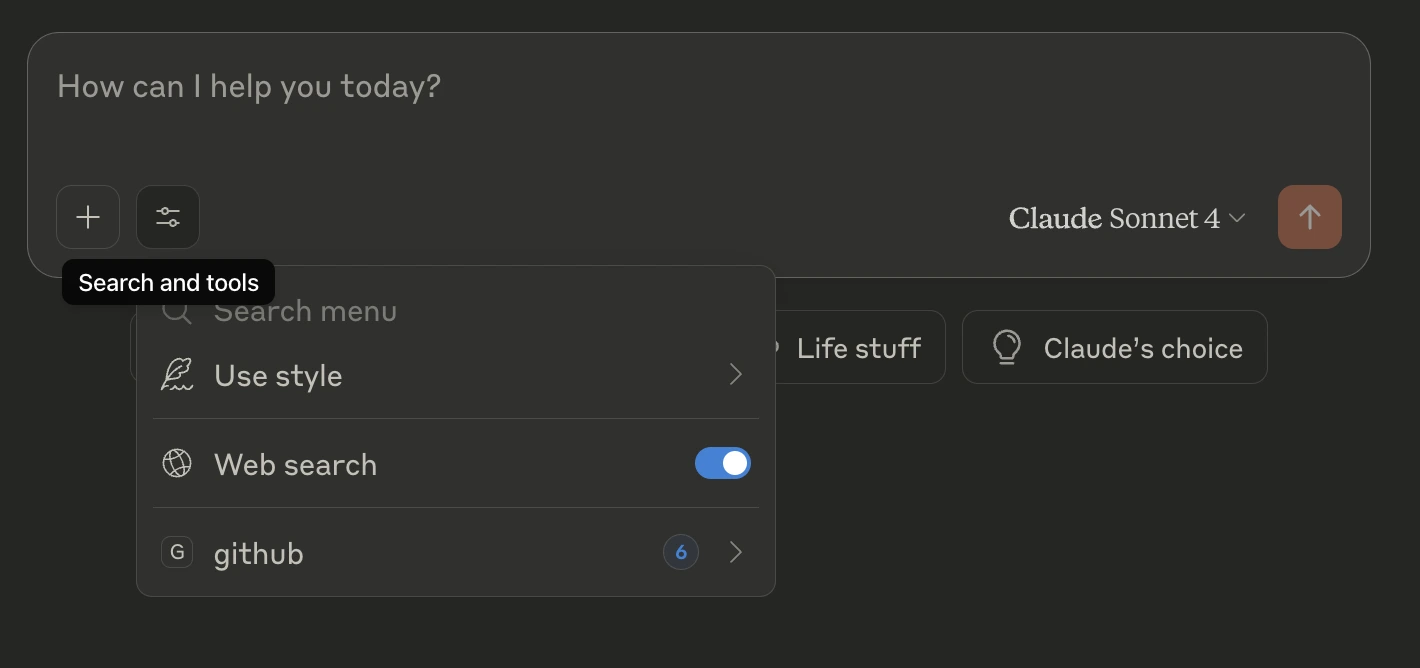

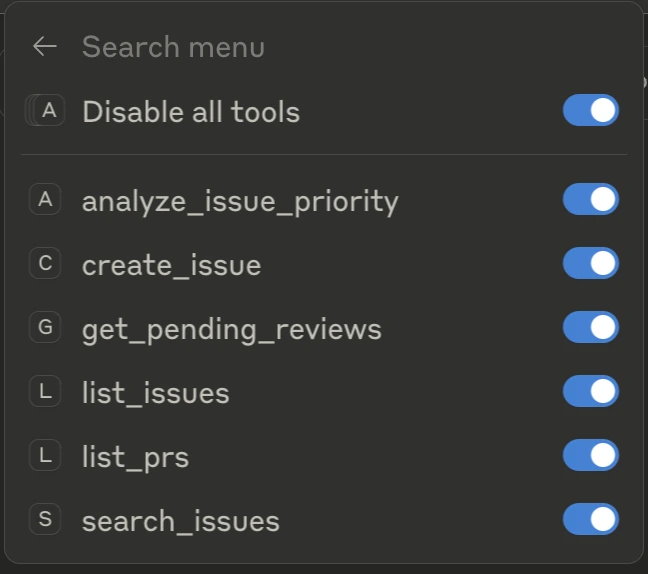

Once it restarts, look for a slider icon in the bottom-left corner of the prompt input box. Clicking this icon will open the tool toggle panel, where you can see all the MCP tools registered with Claude.

Click the GitHub link to view all the options and functions that GitHub MCP can perform.

Note that the repository includes additional tools beyond what’s shown here. You can explore the repo for a full list of supported operations and add or disable tools as needed from the interface.

You can also go back to Developer Settings of Claude Desktop to check the configuration:

Let’s now go ahead and test it.

Testing MCP servers with Claude Desktop

Once the configuration is complete, you can interact with your MCP servers from Claude Desktop using natural language prompts like:

- “List all open PRs in gptscript-ai/gptscript”

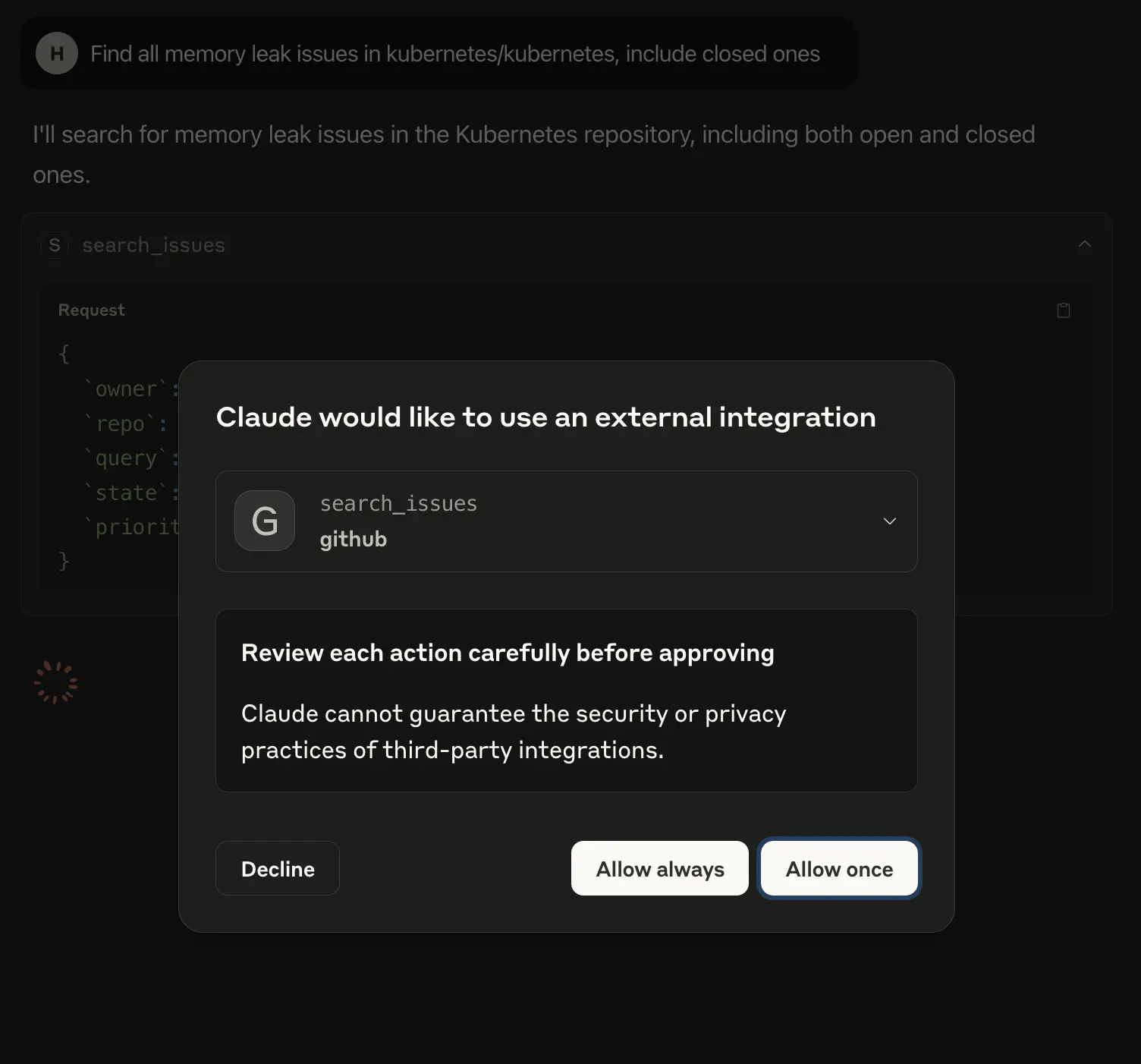

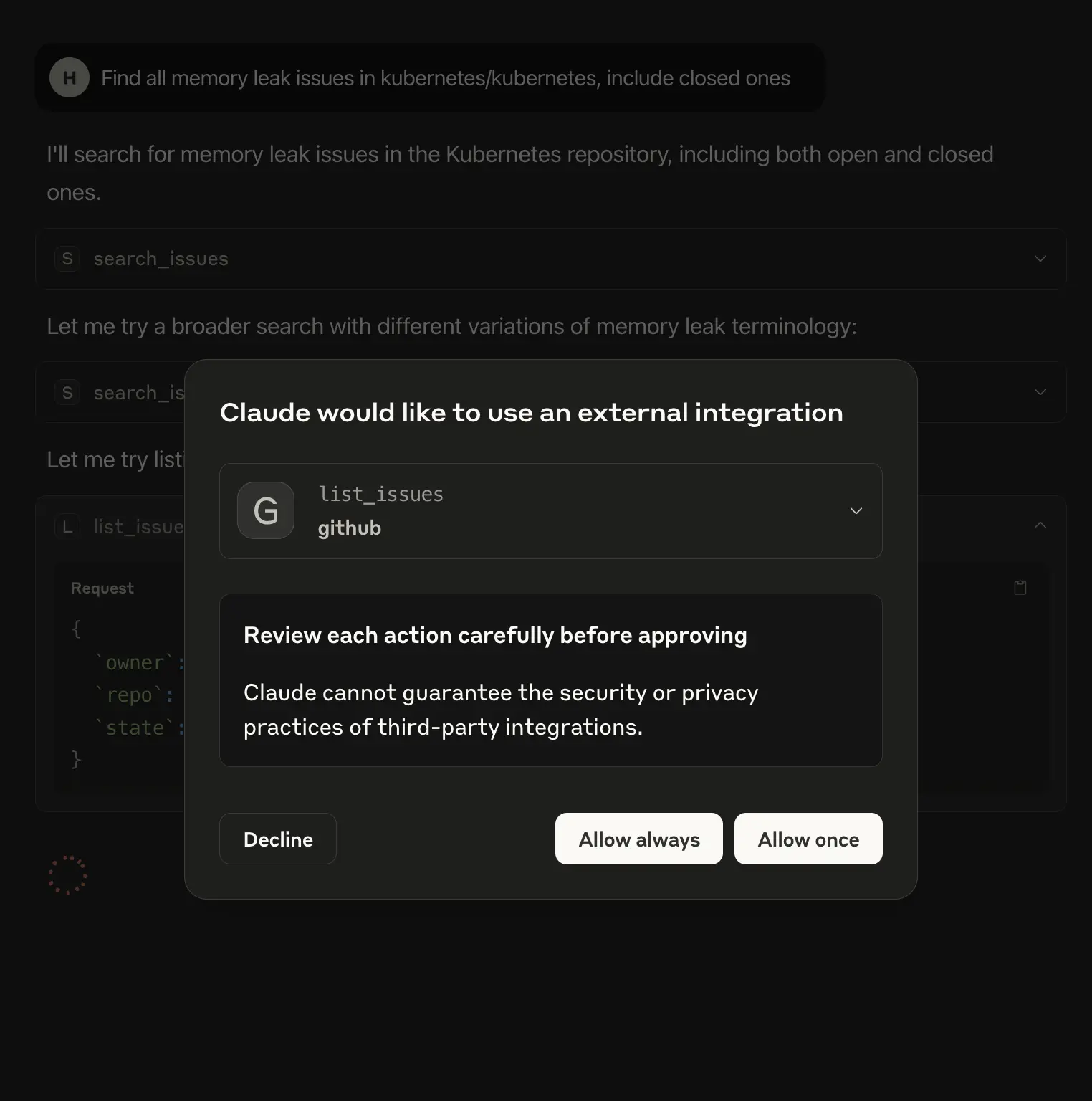

- “Find all memory leak issues in kubernetes/kubernetes, including closed ones”

- “Create a GitHub issue in the kubernetes/kubernetes repo about flaky tests in the CI pipeline”

Claude Desktop will route the requests to your local MCP servers using the tools/call method and return structured responses.

Claude Desktop will ask for permission before it performs each of these tasks.

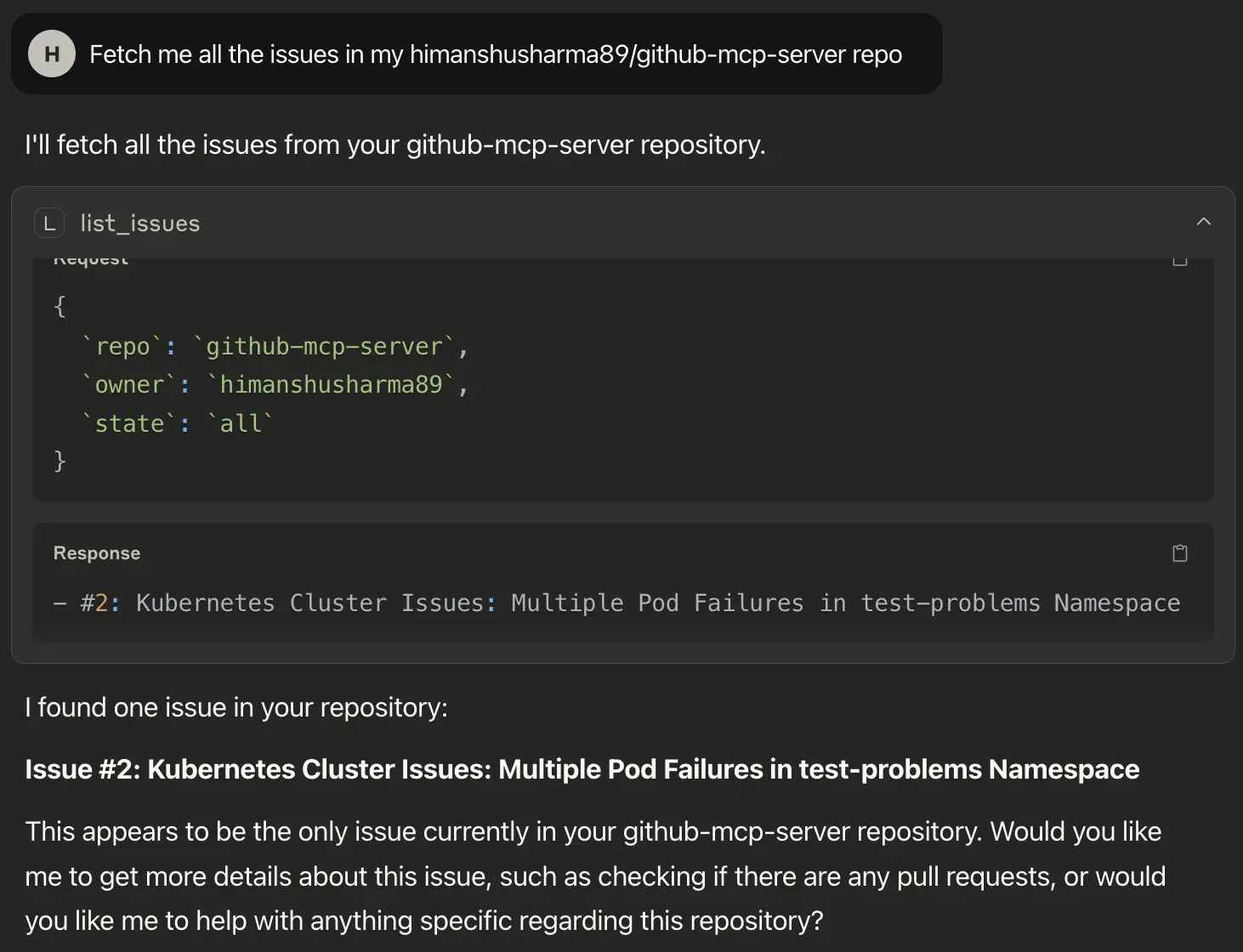

Here’s an example of the list_issues tool:

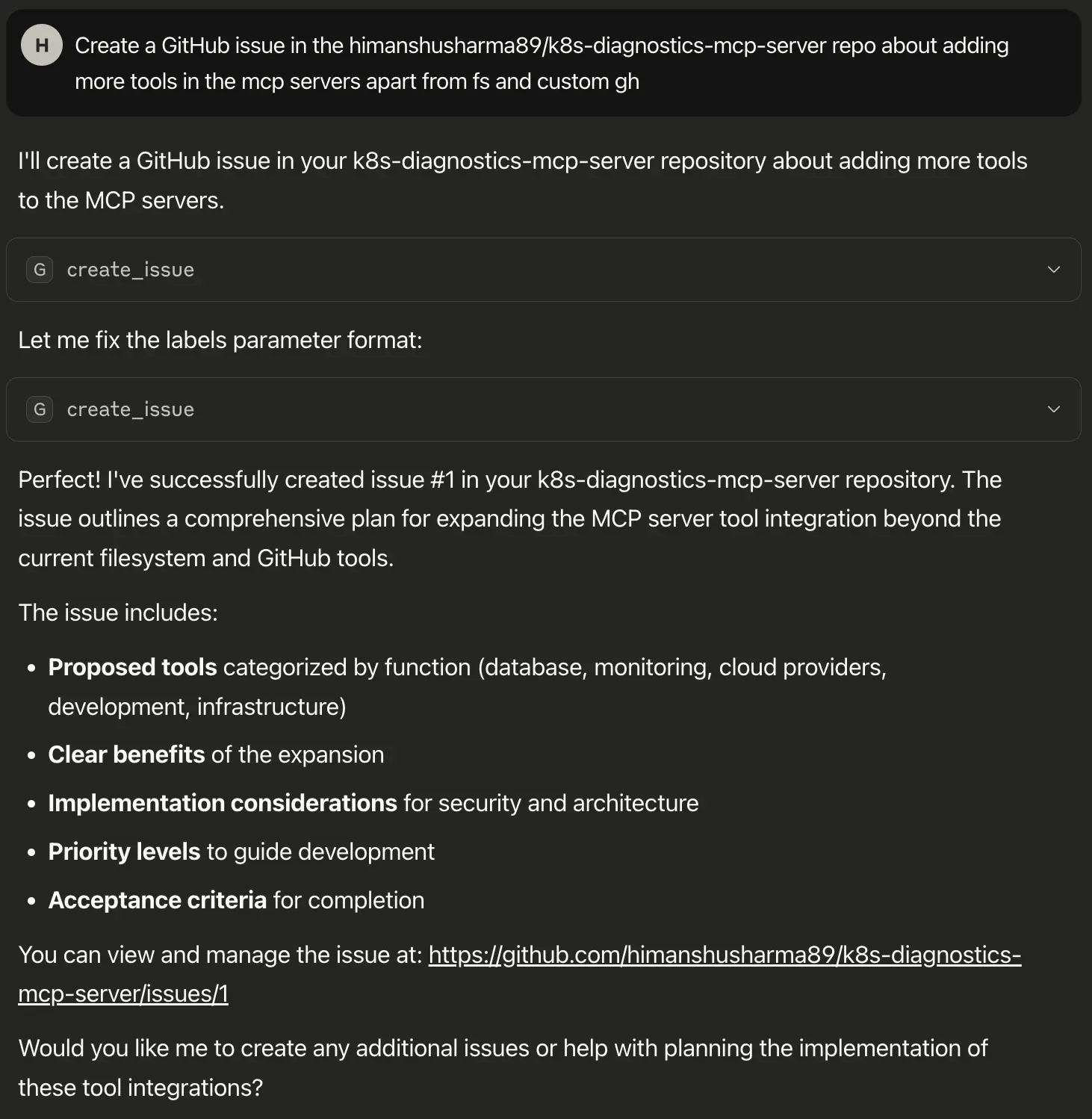

Here’s an example of the create_issue tool:

The initial request generated the following input:

{

"repo": "k8s-diagnostics-mcp-server",

"owner": "himanshusharma89",

"title": "Add support for additional MCP server tools beyond fs and custom GitHub integration",

"body": "...", // Full issue content omitted for brevity

"labels": "enhancement,mcp-integration,tooling"

}

And the server returned an error:

MCP error -32603: json: cannot unmarshal string into Go struct field ToolInput.labels of type []string

The tool definition advertises labels as a comma-separated string, but the server’s ToolInput struct expects a list of strings, so unmarshaling the request failed. The LLM read the error message and fixed the request by sending an array instead:

"labels": ["enhancement", "mcp-integration", "tooling"]

It retried the request, and this time, it succeeded.

✅ Issue created successfully!

- Number: #1

- Title: Add support for additional MCP server tools beyond fs and custom GitHub integration

- URL: https://github.com/himanshusharma89/k8s-diagnostics-mcp-server/issues/1

- State: open

This shows how the LLM can catch and fix common API mistakes on its own, making tool usage smoother for us.
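The retry could be avoided on the server side by accepting both forms. The sketch below is one possible approach, not the repository's code: a hypothetical stringList type with a custom UnmarshalJSON that accepts either a JSON array of strings or a single comma-separated string. createIssueInput and parseCreateIssue are also illustrative names.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// stringList accepts ["a","b"] as well as "a,b".
type stringList []string

func (s *stringList) UnmarshalJSON(data []byte) error {
	// First try the array form.
	var list []string
	if err := json.Unmarshal(data, &list); err == nil {
		*s = list
		return nil
	}
	// Fall back to a comma-separated string.
	var joined string
	if err := json.Unmarshal(data, &joined); err != nil {
		return fmt.Errorf("expected a string or an array of strings: %w", err)
	}
	var out []string
	for _, part := range strings.Split(joined, ",") {
		if p := strings.TrimSpace(part); p != "" {
			out = append(out, p)
		}
	}
	*s = out
	return nil
}

// createIssueInput is a trimmed-down stand-in for the tool's input struct.
type createIssueInput struct {
	Title  string     `json:"title"`
	Labels stringList `json:"labels"`
}

// parseCreateIssue decodes raw tool arguments into the input struct.
func parseCreateIssue(raw string) (createIssueInput, error) {
	var in createIssueInput
	err := json.Unmarshal([]byte(raw), &in)
	return in, err
}

func main() {
	a, _ := parseCreateIssue(`{"title":"t","labels":"enhancement, tooling"}`)
	b, _ := parseCreateIssue(`{"title":"t","labels":["enhancement","tooling"]}`)
	fmt.Println(len(a.Labels), len(b.Labels))
}
```

Whichever form the server accepts, the tool description and the input struct should agree, so the LLM's first attempt matches what the server can parse.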

Best practices and implementation patterns

Effective MCP server development requires adherence to proven patterns and practices that ensure reliability, maintainability, and security.

Best practices

Follow these practices for smooth operation:

- Validate early: Use input schemas to catch errors before execution.

- Keep it stateless: Makes testing, scaling, and debugging easier.

- Fail predictably: Use structured error responses that LLMs can interpret.

- Log meaningfully: Include context like tool name, duration, and errors.

- Secure by design: Use scoped credentials and avoid hardcoding secrets.

- Optimize APIs: Add caching or circuit breakers if working with rate-limited services.
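Two of these practices, failing predictably and logging meaningfully, can be combined in a small wrapper around each tool. The sketch below is an illustration under assumed names (toolFunc, outcome, instrument); it is not an mcp-go API. It logs the tool name, duration, and error, and converts failures into text the LLM can read.

```go
package main

import (
	"errors"
	"fmt"
	"log"
	"os"
	"time"
)

// toolFunc is a simplified tool implementation.
type toolFunc func(args map[string]any) (string, error)

// outcome is what goes back to the model: text plus an error flag.
type outcome struct {
	Text    string
	IsError bool
}

// Log to stderr: with the stdio transport, stdout carries protocol messages.
var stderrLogger = log.New(os.Stderr, "mcp ", log.LstdFlags)

// errRateLimited is a sample failure used in the demo below.
var errRateLimited = errors.New("GitHub API rate limit exceeded")

// instrument wraps a tool with timing, logging, and error conversion.
func instrument(name string, logger *log.Logger, next toolFunc) func(map[string]any) outcome {
	return func(args map[string]any) outcome {
		start := time.Now()
		text, err := next(args)
		elapsed := time.Since(start)
		if err != nil {
			logger.Printf("tool=%s duration=%s error=%q", name, elapsed, err)
			return outcome{Text: fmt.Sprintf("%s failed: %v", name, err), IsError: true}
		}
		logger.Printf("tool=%s duration=%s ok", name, elapsed)
		return outcome{Text: text}
	}
}

func main() {
	listPRs := instrument("list_prs", stderrLogger, func(map[string]any) (string, error) {
		return "", errRateLimited
	})
	fmt.Println(listPRs(nil).Text)
}
```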

Architecture patterns to explore

- Single-purpose server: One tool category per server (e.g., GitHub, Kubernetes).

- Multi-tool server: Exposes multiple related tools with shared logic.

- Scheduled execution: Trigger tools on a timer (e.g., hourly news summarizer).

- Nested tools: Tools that internally call others, support chaining logic.

- LLM orchestration: Use with agents like Obot to handle real-world automation.

- MCP tool chaining: Compose complex behavior from small tools (like Unix pipes, but for AI).

These patterns help design servers that scale with complexity while staying maintainable.
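As a rough sketch of the tool chaining idea, the example below composes small steps into one pipeline, in the spirit of Unix pipes. The step type and the chain, keepMatching, and countLines helpers are hypothetical; in a real setup each step might be a separate MCP tool invoked by the LLM or an orchestrator.

```go
package main

import (
	"fmt"
	"strings"
)

// step transforms text, like one stage in a Unix pipeline.
type step func(input string) (string, error)

// chain runs steps in order and stops at the first error.
func chain(steps ...step) step {
	return func(input string) (string, error) {
		current := input
		for i, s := range steps {
			out, err := s(current)
			if err != nil {
				return "", fmt.Errorf("step %d: %w", i+1, err)
			}
			current = out
		}
		return current, nil
	}
}

// keepMatching keeps only lines that contain keyword (case-insensitive).
func keepMatching(keyword string) step {
	return func(input string) (string, error) {
		var kept []string
		for _, line := range strings.Split(input, "\n") {
			if strings.Contains(strings.ToLower(line), strings.ToLower(keyword)) {
				kept = append(kept, line)
			}
		}
		return strings.Join(kept, "\n"), nil
	}
}

// countLines summarizes how many lines survived the earlier steps.
func countLines(input string) (string, error) {
	if input == "" {
		return "0 matching issues", nil
	}
	return fmt.Sprintf("%d matching issues", strings.Count(input, "\n")+1), nil
}

func main() {
	issues := "#1 Memory leak in cache\n#2 Fix docs\n#3 Goroutine leak on shutdown"
	summarize := chain(keepMatching("leak"), countLines)
	out, err := summarize(issues)
	fmt.Println(out, err)
}
```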

Closing thoughts

We explored the practical aspects of building production-ready MCP servers, from basic tool implementation to advanced architectural patterns. To continue your MCP journey, explore the complete implementation in our GitHub MCP Server repository.

The MCP is a significant step in standardizing LLM integration, providing a foundation for building robust, scalable AI applications. Resources like the mark3labs/mcp-go library and the awesome-mcp-servers repository are key parts of the community-driven ecosystem. The MCP specification is evolving to support features like stateful sessions and advanced chaining, enabling more sophisticated AI applications while maintaining simplicity. Emerging patterns such as tool composition and integration with agent frameworks like Obot highlight the potential for autonomous systems built on MCP.

By exploring implementations, experimenting, and engaging with the community, developers can master these patterns and contribute to building powerful, maintainable AI applications using standardized protocols.

To learn more about the latest in AI, subscribe to our AI-Xplore webinars. We hold regular webinars and host experts in AI to share their knowledge. If you need help building an AI cloud, our AI experts can help you. I hope you found this guide insightful. If you’d like to discuss MCP servers and LLMs further, feel free to connect with me on LinkedIn.
